Visit any news site on the Web and you will be hard pressed not to find numerous stories about Artificial Intelligence (AI) and Large Language Models (LLMs), a type of AI system that is trained on large amounts of text data. The headlines range from “MIT Report: AI can already do the work of 12% of America's workforce,” to “Is AI Dulling Our Minds?,” to “The Rise of ‘Vibe Hacking’ Is the Next AI Nightmare.”

The topics of AI and LLMs are being discussed just as much on the Dark Web as on the Clear Web, according to eSentire’s cybersecurity research team, the Threat Response Unit (TRU). The Dark Web is full of Underground Hacker Forums and virtual Hacker Markets where hundreds of cybercriminals are discussing the tactics, techniques and procedures (TTPs) for carrying out every type of Internet crime imaginable, as well as selling the illicit goods and services to conduct those crimes.

The threat actors are selling malware, online banking credentials, fake passports, stolen identities (a.k.a. fullz), high-limit credit cards, subscriptions to Phishing Services, Malware Services, and the list goes on. And of course, they are selling access to the technology that has taken the world by storm–LLMs. TRU found hackers on the Underground selling access to ChatGPT, Perplexity and Gemini subscriptions.

“When you look at these Underground markets, you see two clear motives for buying access to AI accounts,” said Alexander Feick, AI Security expert and VP of Labs at eSentire and the author of On AI and Trust. “First, a lot of hacker crews just want LLM tools. They use mainstream LLMs the way everyone else does—research, translation, drafting emails, debugging scripts—only they’re pointing that capability at phishing, reconnaissance, and basic tooling for their cybercrime operations. The attacker still makes the decisions; the LLM simply reduces the cost and time per task so they can run more threat campaigns with fewer skilled people.”

“Second, there’s the data angle,” continued Feick. “A stolen ‘power user's' account credentials can expose years of prompts, files, and integrations that may include sensitive corporate work. If that turns out to be the case, then the threat actor has access to valuable corporate data from which to launch any number of scams against the power user and the company they work for. This could include targeted phishing attacks, extortion campaigns, Intellectual Property theft, and more. Today that might only be access to chat logs and a few connectors. But as more businesses move from ‘we use AI’ to ‘AI is wired into how we operate,’ that same account can become a remote control for an entire ecosystem. The core risk doesn’t change—the blast radius does."

Need an AI Infusion into Your Cybercrime Operation? Hackers have Your AI Needs Covered and at the Right Price

Want access to a ChatGPT Pro or ChatGPT Plus Account but can’t afford it? Not a problem, the hackers on the Underground have prices to suit almost anyone’s budget this holiday season.

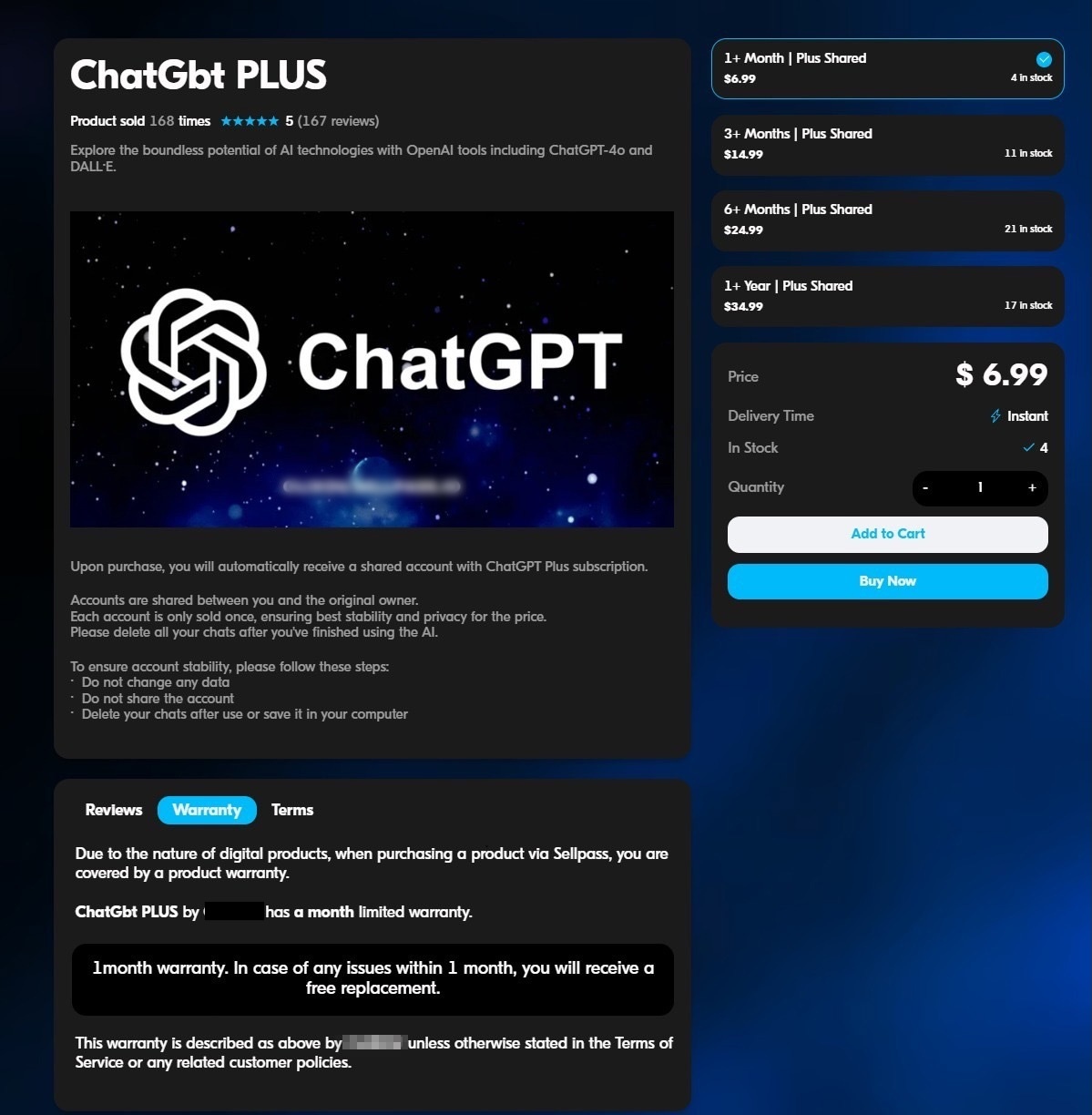

TRU saw one threat actor, in the Underground Markets, renting “shared” access to ChatGPT Plus accounts. According to the ad, rental prices started at $6.99 for one month, $14.99 for three months, $24.99 for six months and $34.99 for one year (Figure 1).

What does “shared access” mean? The ad explained that the customer/renter would be sharing the ChatGPT Plus account with the original owner. However, the account would only be sold once “ensuring best stability and privacy for the price.”

The ad also recommended that customers follow these tips to ensure stability of the ChatGPT account:

- Do not change any data

- Do not share the account

- Delete your chats after use or save them to your computer.

Ironically, the seller posting these ads advertises that customers receive a “limited-service guarantee” with their purchase. The ad reads: “1 month warranty. In case of any issues within 1 month, you will receive a free replacement.”

Thus, if a buyer purchases a three-month rental of a “shared” ChatGPT Plus account and problems arise within the first month, the seller will provide the customer with a new “shared” ChatGPT Plus account (Figure 1).

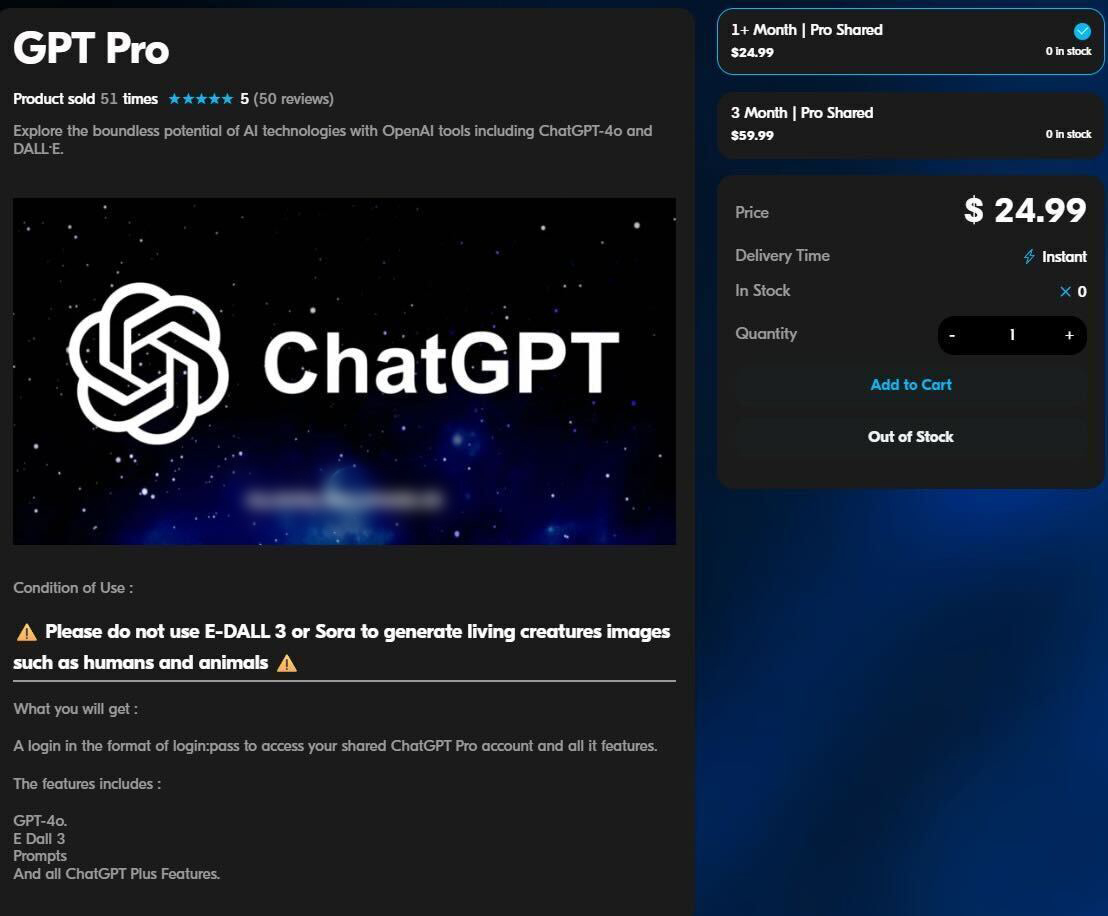

Interestingly, the seller also advertised “Shared Access” to ChatGPT Pro accounts for $24.99 for one month or $59.99 for three months. However, as of December 1, 2025, the seller indicated in their ad that “shared ChatGPT Pro accounts” were out of stock (Figure 2).

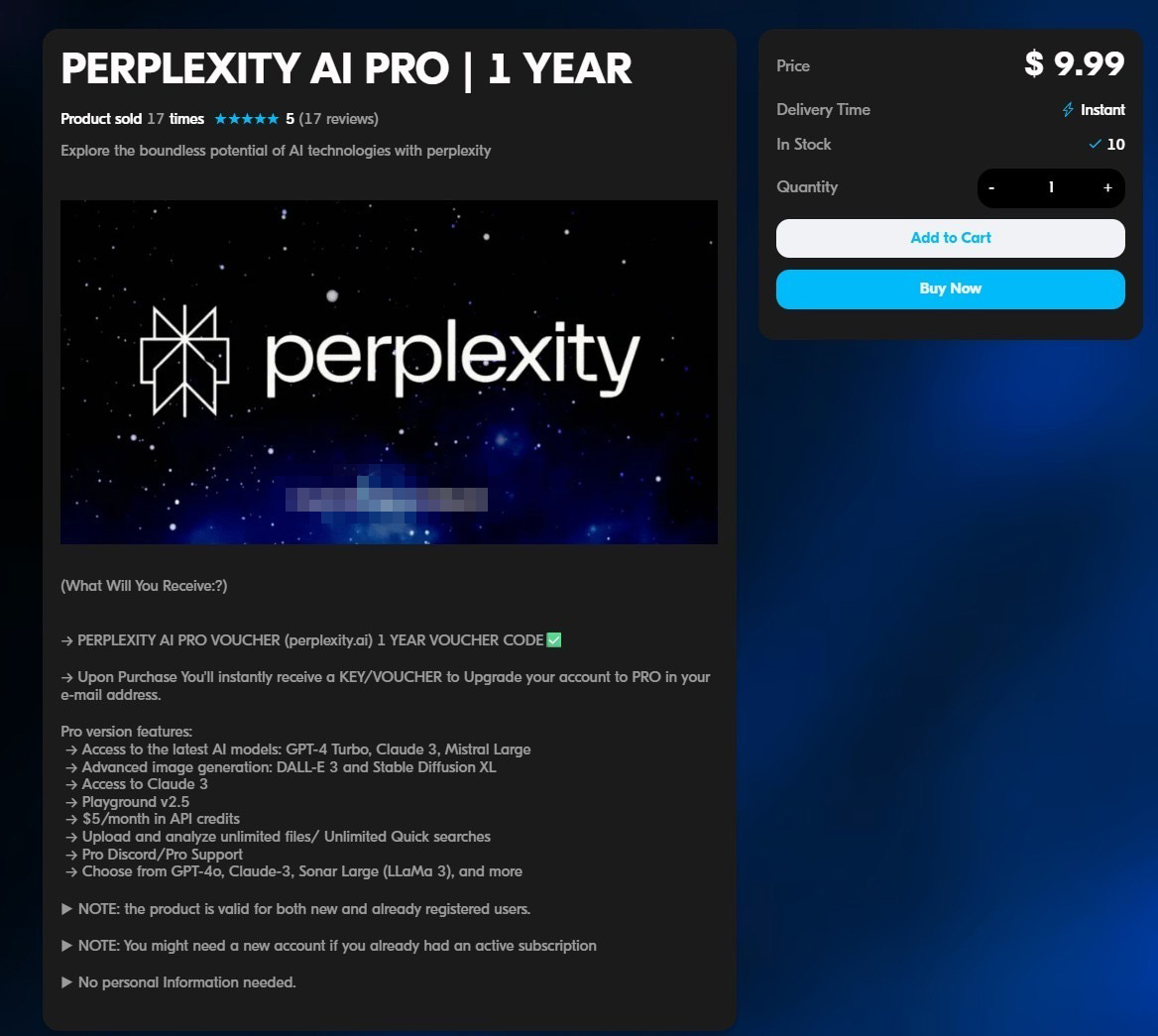

The same threat actor renting “shared” ChatGPT Plus accounts was also advertising one-year subscription vouchers for Perplexity AI Pro for only $9.99. The ad explained that buyers would receive a key/voucher code in their email as soon as their payment was processed, and they would immediately be able to upgrade their Perplexity AI account to AI Pro status.

For cybercriminals needing a powerful LLM development environment, this offer should be very enticing (Figure 3). According to Perplexity’s website, an annual subscription to Perplexity AI Pro runs $200. Subscribers get unlimited file uploads and unlimited use of ChatGPT-5 and Claude Sonnet 4.5, as well as access to new products. Subscribers also get over 300 “Pro searches” daily and access to Perplexity Labs and Research with no volume restrictions, as well as priority support.

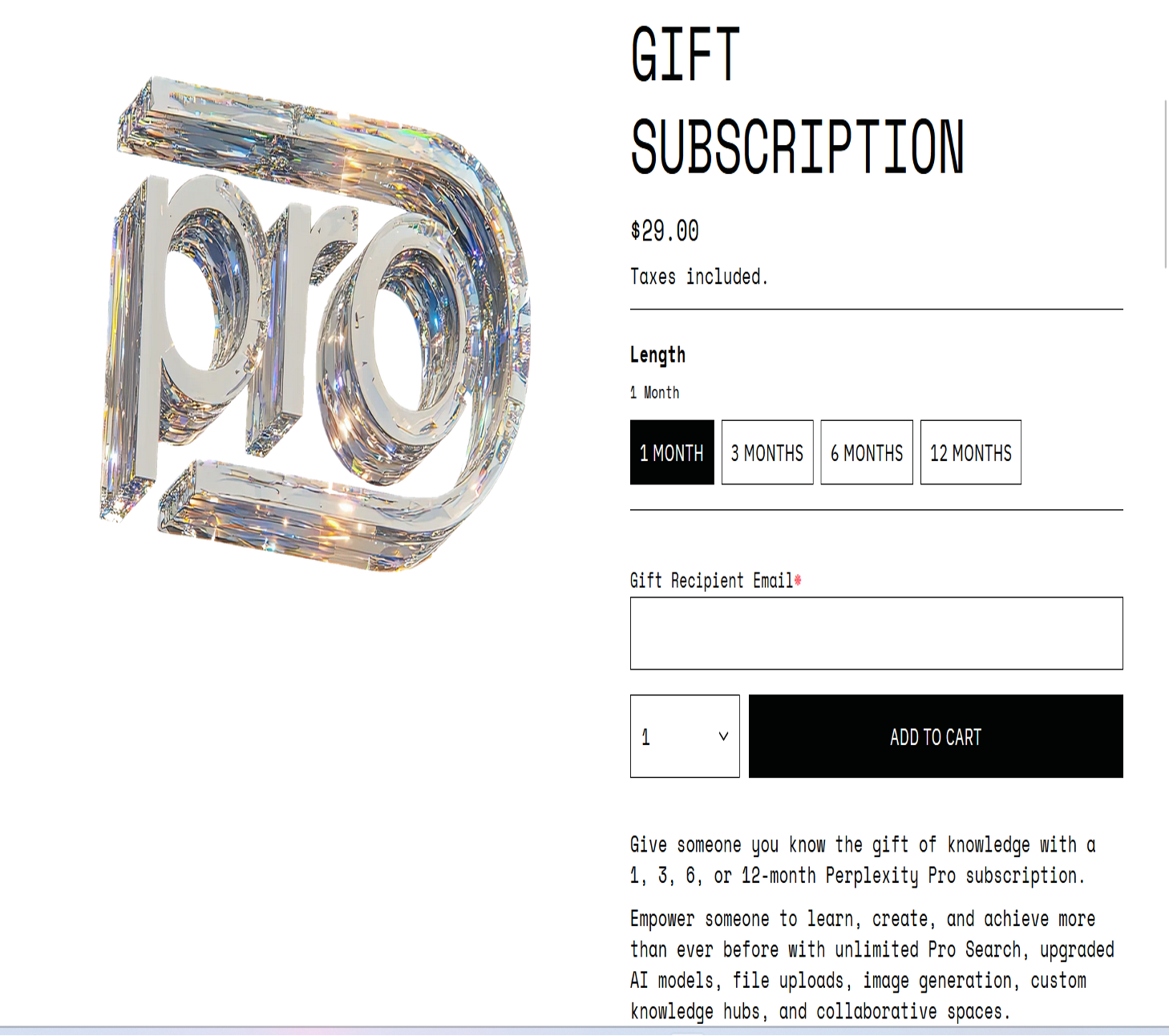

The seller claims to have sold 17 of these vouchers thus far and maintains a rating of 5 stars. Readers might wonder how a threat actor goes about getting their hands on these subscription vouchers. Well, it is quite easy. Perplexity sells gift Pro subscriptions for 1, 3, 6, or 12 months on their website (Figure 4).

Thus, the threat actor/seller goes to Perplexity’s website, purchases a one-year gift subscription for their customer, and enters the customer’s email address into the section of the website labeled “gift recipient email.” The voucher, containing a link or code to redeem the subscription, is then sent directly to the customer.

You Don’t Want to Share Your New ChatGPT or Google AI Account? Not a Problem

There are threat actors that want to upgrade their ChatGPT subscription, but don’t want to pay OpenAI $200 a month for the Pro plan, $20 a month for the Plus plan or $4.50 a month for the Go plan. Plus, there are those who also do not want to share their ChatGPT account with another user. TRU found several sellers on the Underground Markets willing to accommodate those terms.

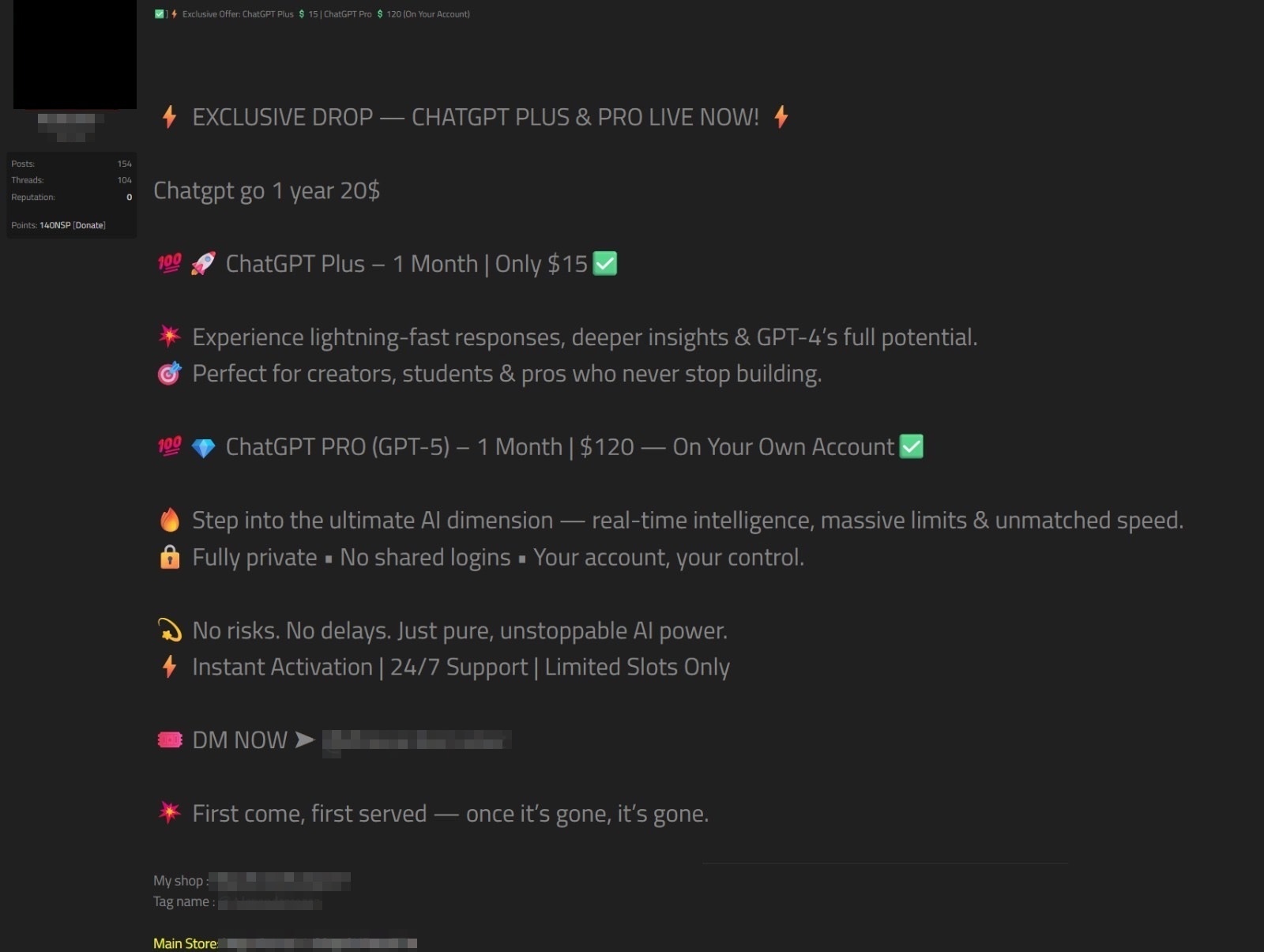

One such merchant was offering an enticing price point. If a ChatGPT account holder wants to be upgraded to ChatGPT Pro for one month, it will cost them $120, as opposed to $200. If an account holder wants to be upgraded to ChatGPT Plus for a month it will be $15, as opposed to $20, and if any users of the free ChatGPT service want to be upgraded to ChatGPT Go for a year, they will pay $20, as opposed to $54. Note: OpenAI currently only sells Go subscriptions in India and Indonesia.

Once a customer determines what type of “upgrade” they want, the transaction proceeds as follows. The customer gives the seller the email address and password for their ChatGPT account; the seller then logs into the account and pays for the upgrade with a credit card. TRU believes it's probable that these sellers are leveraging stolen credit cards.

As one can see from the threat actor’s advertisement (Figure 5), the tone of the ad is extremely enthusiastic as they describe the functionality one will get with an upgrade to ChatGPT Pro: “Step into your ultimate AI Dimension—real time intelligence, massive limits & unmatched speed.”

The seller also emphasizes that their service is totally private, with the ad stating: "Fully Private—No shared logins—Your account, your control."

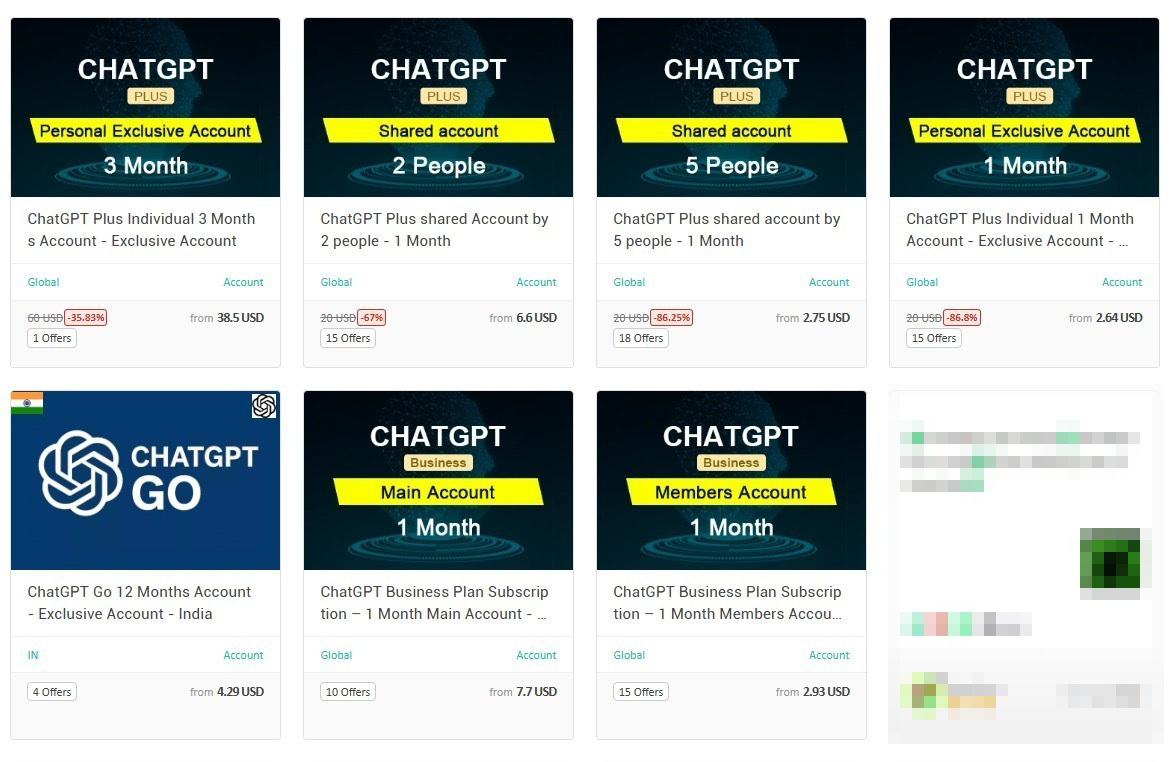

TRU discovered another threat actor selling “exclusive” and “shared” ChatGPT Plus accounts, in addition to several ChatGPT Business accounts. The threat actor had an offer where a buyer could purchase access to a Plus account that would be shared between 5 people (Figure 6).

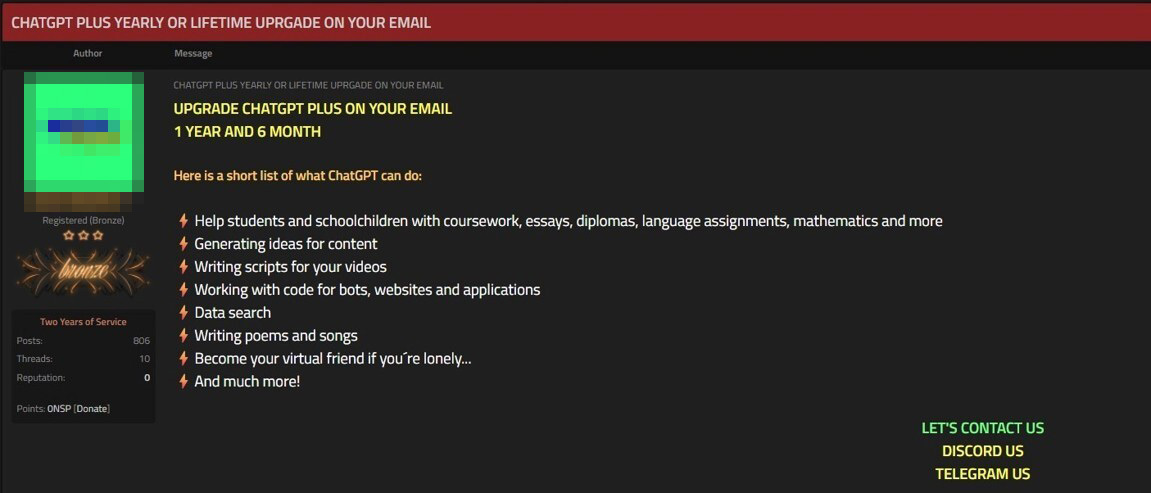

TRU discovered another threat actor selling ChatGPT account holders the opportunity to upgrade their subscription to a ChatGPT Plus account and the account would be private (Figure 7).

Interestingly, the advertiser highlights the many different things a ChatGPT Plus account can do including:

TRU had to wonder what parents would be on the Underground Hacker Markets and Forums looking to purchase a ChatGPT subscription to help their child with school assignments. However, the most amusing statement in the ad was the seller’s claim that a ChatGPT Plus account can “become your virtual friend if lonely.”

When TRU contacted the seller to get the cost for the upgrade, they found that the threat actor was also selling upgrades to a ChatGPT Pro subscription, which interested the TRU researchers because of the extensive capabilities and tools that a Pro account provides to a subscriber. The cost was $120 for one month’s access to ChatGPT Pro. TRU assesses that a cybercriminal looking to purchase access to a “private” ChatGPT Pro account would find a price tag of $120 for a month’s usage quite desirable.

The seller also offered a slight variation of the plan. If a buyer was willing to use a ChatGPT Pro account that the seller had established, then they would only be charged $80 for one month’s use of ChatGPT Pro. Of course, this meant that the seller could log into the ChatGPT Pro account at any time, and see what the customer was working on, unless the customer consistently deleted their chats.

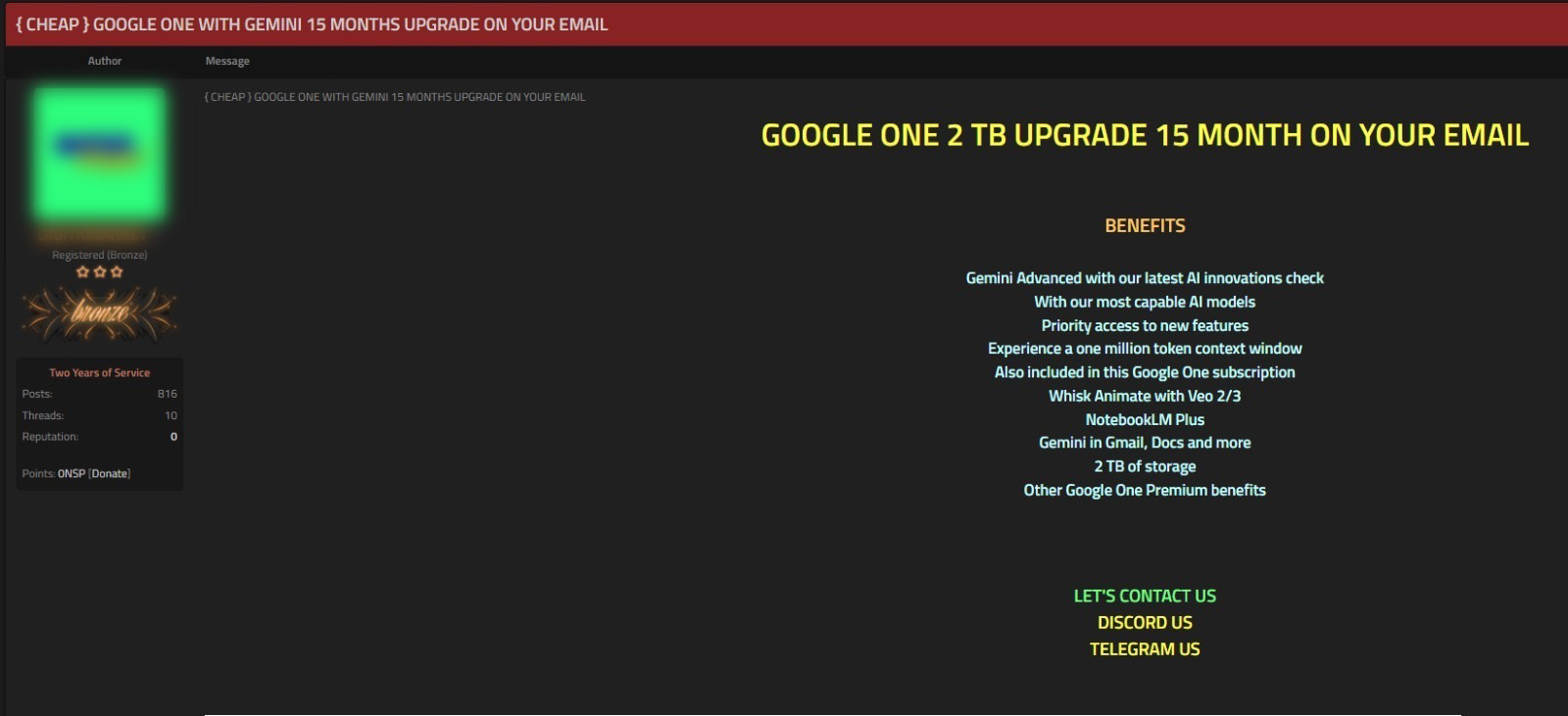

The seller in Figure 7 was also advertising upgrades from a Google One subscription to a 15-month Google AI Premium subscription, now called Google AI Pro (Figure 8). Google advertises that Google AI Pro gives subscribers access to Gemini 3 Pro, Deep Research and image generation, video generation with Veo 3.1 Fast, Flow (Google’s AI filmmaking tool), 1,000 monthly AI credits across Flow and Whisk, and an array of other benefits.

Although the seller does not list the price for this 15-month upgrade in their ad, TRU found out it was $35. Google currently charges $26.99 per month for AI Pro. So, essentially a buyer would get a 15-month subscription to Google AI Pro for $35 as opposed to $404.85.
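To put the underground prices quoted so far side by side, the short Python sketch below recomputes the discounts. It uses only the figures cited in this blog; the dictionary layout and the `discount_pct` helper are illustrative names, not anything taken from the ads.

```python
# Prices quoted in this blog (USD): (legitimate list price, underground price).
# The Google AI Pro list price is 15 months at $26.99 per month.
OFFERS = {
    "ChatGPT Pro, 1 month": (200.00, 120.00),
    "ChatGPT Plus, 1 month": (20.00, 15.00),
    "ChatGPT Go, 1 year": (54.00, 20.00),
    "Perplexity AI Pro, 1 year": (200.00, 9.99),
    "Google AI Pro, 15 months": (15 * 26.99, 35.00),
}


def discount_pct(list_price: float, street_price: float) -> float:
    """Percentage saved relative to the legitimate list price."""
    return (list_price - street_price) / list_price * 100


for name, (list_price, street_price) in OFFERS.items():
    print(
        f"{name:28s} list ${list_price:7.2f}  "
        f"underground ${street_price:6.2f}  "
        f"discount {discount_pct(list_price, street_price):4.1f}%"
    )
```

The comparison treats each offer as a like-for-like purchase, which may overstate the value of offers that come with a shorter warranty or a shared login.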

Feick believes that the fraudsters on the Underground Markets who are purchasing “shared” ChatGPT Plus accounts want these accounts because they essentially expect to be burning them regularly.

“If you are performing phishing and other illegal activities from these accounts, the AI providers are watching and trying to close them down,” said Feick. “So, as a hacker you want to buy a bunch of accounts because you know every few weeks your account is going to be shut down and deactivated for using it outside the terms of use. But you don’t care, because you aren’t paying a lot for it, and you can just move to the next account.”

By contrast, those threat actors wanting to upgrade to a “private” ChatGPT Pro, Google AI Pro or some other “Pro-Level” account are “power users.” They are skilled in software development and are very serious about improving their cybercrime operation.

"The value of higher-tiered LLM subscriptions, like ChatGPT Pro, Google AI Pro and others, isn’t that they suddenly unlock ‘Hollywood-grade’ cyberattacks. It’s that they give the threat actor more capacity: longer context windows, faster responses, higher usage limits, and a better toolchain," said Feick. “Essentially LLMs are helping the adversaries translate and create more personalized phishing campaigns at scale, clean up sloppy scripts on the fly, refactor commodity malware components, and generate the documentation and playbooks that keep an attack campaign organized,” continued Feick.

Last month, Google’s Threat Intelligence Group (GTIG) reported they had discovered state-sponsored threat actors from North Korea, Iran and the People’s Republic of China, misusing AI to improve their cyber operations, from creating phishing lures to reconnaissance, to the extraction of data.

Anthropic also discovered state-sponsored hackers from China using AI. Anthropic, the makers of the LLM Claude, issued a report in November detailing their discovery of threat actors, sponsored by the Chinese government, using Claude to perform automated cyberattacks against approximately 30 global organizations.

One might ask, “Why don’t the companies behind the popular LLMs prevent threat actors from using their models to further advance cybercrime?”

“Mainstream models do ship with guardrails, and they block a lot of abuse,” said Feick. “However, guardrails aren’t a wall. On the underground, you see two patterns over and over. One is endless experimentation with jailbreak prompts against popular models. The result—people trading techniques that sometimes produce usable phishing copy or code snippets, but sometimes these prompts just produce noisy output that needs work.”

“The other is the rise of purpose-built criminal LLMs that strip away most of those safety checks,” continued Feick. “Hacker crews will often prototype and iterate phishing content, malware components, or social-engineering scripts in these ‘dark’ models, then bring the polished output back into their regular tooling. In a world where you let LLM agents drive other tools—send emails, open tickets, touch data sources—the combination of jailbreaks, malicious LLMs, and highly connected agentic accounts can turn a single compromise into an automated attack engine. We’re not there at scale yet in most enterprises, but the trajectory is clear.”

Show Me the Money

At the beginning of the blog, TRU showed how one threat actor was selling access to “shared” ChatGPT Plus and ChatGPT Pro accounts for between $6.99 and $59.99, depending on how long the customer rented access and whether it was a ChatGPT Plus or Pro account. This threat actor was also selling one-year subscriptions to Perplexity AI Pro for $9.99. So, what kind of return can this cybercriminal potentially make?

TRU cannot determine how many ChatGPT account subscriptions the threat actor has actually sold, although they say in their ad (Figure 1) they have sold access to 168 ChatGPT Plus accounts, 51 ChatGPT Pro accounts (Figure 2) and 17 Perplexity Pro accounts (Figure 3). What TRU can potentially estimate is how much the threat actors might be paying for these LLM accounts and LLM upgrades. TRU believes it's probable that the sellers, highlighted in the blog, are leveraging stolen credit cards to buy the LLM accounts and the upgrades or they are getting access to LLM account credentials found in stealer logs.

Cybercriminals can purchase a cache of stolen credit cards on the Underground for anywhere between $5 and $20 per card. The price varies depending on the card’s credit limit, issuing bank and country of origin. Thus, if the threat actor spent $10 on a stolen credit card to purchase a year’s ChatGPT Plus subscription and can resell “shared access” to it for $34.99 (Figure 1), they have made back more than three times their investment.

If they spent $10 on a credit card to purchase a year’s subscription to ChatGPT Pro and turned around and sold “shared” access to that account for $59.99, they would have made almost six times their investment.

The same goes for those threat actors selling “private upgrades” to ChatGPT Plus and Pro accounts. The blog profiles two threat actors who are selling one-month upgrades to ChatGPT Pro subscriptions for $120 (Figures 5 and 7). Thus, if the seller spends $20 on a stolen credit card to upgrade a customer’s ChatGPT account to a Pro-level subscription for one month and charges the customer $120, they have made six times their investment. Certainly, it makes sense that the threat actors would be motivated to sell six-month and one-year upgrades to ChatGPT Plus and one-month upgrades to ChatGPT Pro because of the high return they can make.
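The return estimates above can be reproduced with a few lines of Python. This is a back-of-the-envelope sketch under the same assumptions the blog makes (one stolen card buys one subscription, and access is resold once); the scenario labels and the `return_multiple` helper are illustrative.

```python
def return_multiple(card_cost: float, resale_price: float) -> float:
    """Resale price expressed as a multiple of what the seller spent."""
    return resale_price / card_cost


# (label, assumed cost of the stolen card, advertised resale price) in USD
SCENARIOS = [
    ("Shared ChatGPT Plus, one year", 10.00, 34.99),
    ("Shared ChatGPT Pro", 10.00, 59.99),
    ("Private ChatGPT Pro upgrade, one month", 20.00, 120.00),
]

for label, card_cost, resale_price in SCENARIOS:
    multiple = return_multiple(card_cost, resale_price)
    print(f"{label}: {multiple:.1f}x the seller's outlay")
```

Run as written, this prints roughly 3.5x, 6.0x and 6.0x, in line with the figures in the text. The real margin would shift with the card price, which the blog puts at anywhere between $5 and $20.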

However, those threat actors who are selling one-month “shared” access to ChatGPT Plus and Pro accounts are clever. They figure there are lots of cybercriminals thinking, “I am about to try something with this Pro or Plus account that I am 80 percent sure is going to get me blocked and then OpenAI will ban my account, so why not try using this throwaway account first—it's cheap and it isn’t registered to me, so why not?”

Stealer Logs—Chock Full of LLM Account Credentials

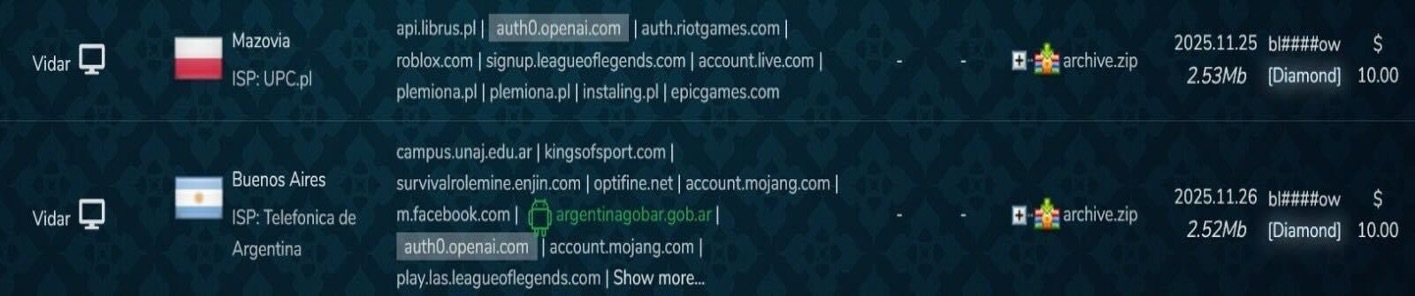

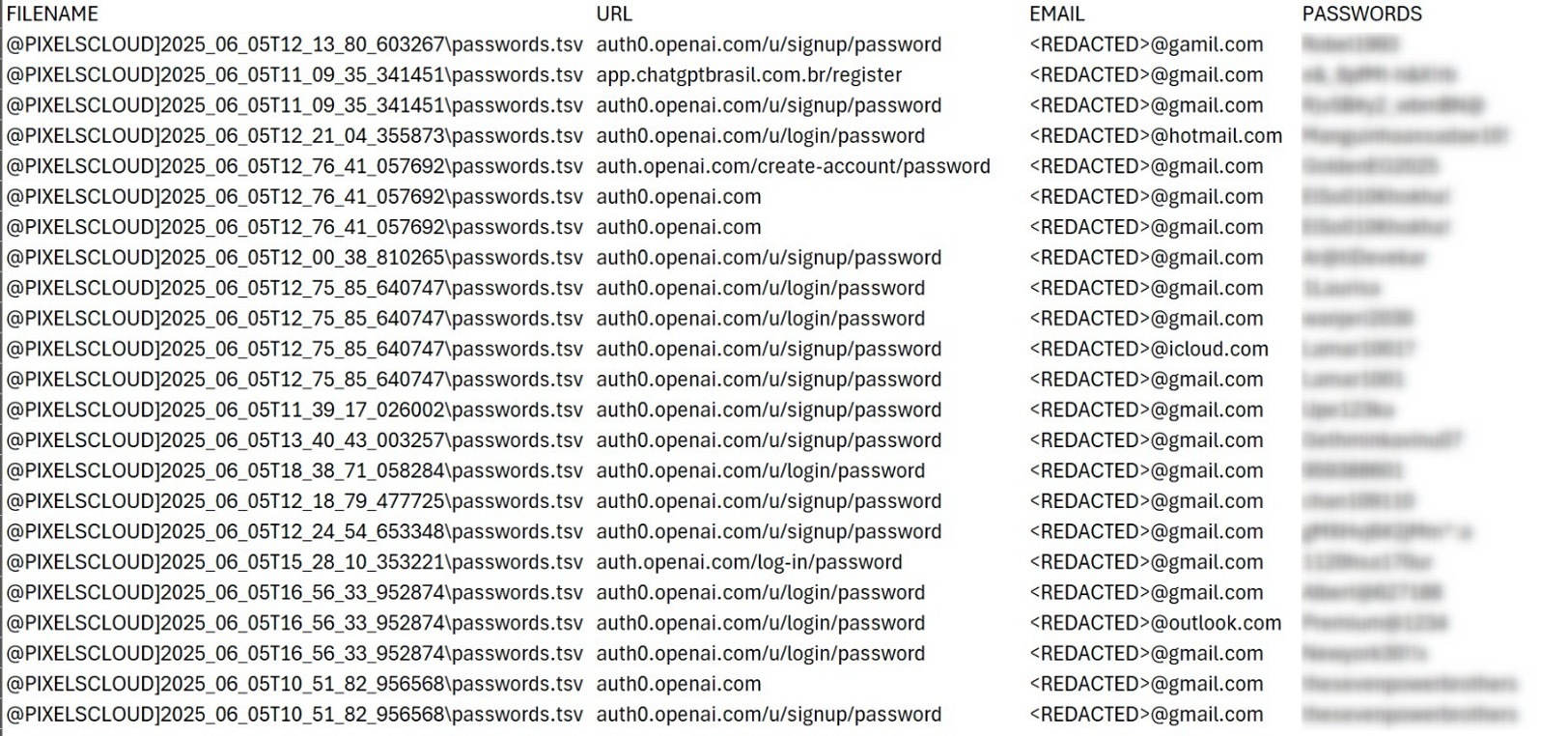

Not only is TRU finding threat actors on the Underground Markets and Forums selling “shared access” and upgrades to LLM accounts, they are also seeing hundreds of “stealer logs” for sale, many of which contain LLM account credentials. A stealer log is a collection of account credentials and sensitive data stolen from a single user’s computer by cybercriminals using infostealer malware.

Infostealers are designed to siphon off data stored in a victim’s web browsers (including usernames and passwords, session cookies, autofill data and credit card numbers), email clients, messaging applications, and cryptocurrency wallets. The usernames and passwords can include login credentials for a victim’s corporate network, online bank accounts, VPNs and LLM accounts.

The theft of a computer user’s session cookies is especially worrisome because these are “active tokens” that a cybercriminal can use to get into an account even where multi-factor authentication protections have been implemented. The active tokens can be used to take over “live” user sessions without a password. Stealer logs range in price but can be purchased for as little as $10 each on markets such as Russian Market.

One of the most popular and prominent Underground Markets for buying and selling stealer logs is Russian Market. It is not only known for having hundreds of stealer logs available; it is also very user-friendly. If a buyer is looking for specific credentials they can filter the logs by specific domains, geography, the vendor, infostealer used, the operating system, etc.

A cybercriminal with credentials for sensitive accounts has the potential to access some very valuable data, especially if they are employee credentials for corporate accounts. It is not surprising that the number of stealer logs being offered for sale on the Underground Markets is increasing every year. In fact, TRU saw infostealer cases involving its clients increase by 30% in 2025.

Examples of stealer logs can be seen in Figure 9 and Figure 10. The screenshots are from Russian Market, and they show only a portion of the credentials available in each stealer log belonging to the four computer victims. Each of the four stealer logs contains credentials for either ChatGPT.com or OpenAI.com. The stealer log for each victim is being sold for $10.

FREE OpenAI Account Credentials—Get’Em While They Last!

It must be the holiday season that inspired a threat actor to give a gift to his fellow cybercriminals: a list of OpenAI account credentials, posted to an Underground Forum. While researching LLM fraud, TRU came across the list, which contained logins (email address and password) for 25 separate OpenAI accounts, offered for free (Figure 11).

So, what can a hacker do with a set of these credentials? If the logins are for a business account and the hacker is able to get into the account, they will gain access to OpenAI’s top models. All the work they do with the models won’t cost them a dime because all the usage will be charged to the credit card belonging to the business that set up the account.

Depending on how many employees of the business are regularly working with the models and in what capacity, it could be months before the business notices an increase in usage. The other plus is that any nefarious use of the models is more likely to blend in if it is interspersed with 100% legitimate usage; it is “hiding in plain sight.”

If a threat actor obtains credentials for an individual’s OpenAI account, it is likely that the account owner is paying attention and the fraudulent activity will get detected sooner than with a business account. However, until that happens the hacker can utilize OpenAI’s models for free because it is getting billed to the account owner’s credit card.

Having access to someone else’s OpenAI account is a great deal for a cybercriminal. Not only does the actual account owner get stuck with the bill, but if the threat actor uses the models for nefarious activity and knows the account will eventually be shut down, it is better to have someone else’s account banned than their own.

“Infostealer logs turn every saved password into a line item in a catalogue, and LLM accounts are now just another entry next to banking, VPN, and email credentials,” explained Feick. “When those logs include a corporate LLM account, the risk isn’t limited to ‘someone can send prompts as you.’ Depending on how that employee has been using the tool, an intruder may be able to replay chat histories, pull down uploaded files, and learn how the victim’s organization structures their projects or handles sensitive workflows,” said Feick.

“In a company where AI is still experimental—maybe one SaaS LLM and a few curious users—that’s a painful but bounded problem,” continued Feick. “You’re mostly worried about whatever those early adopters pasted into the chat box. However, in companies that push deeper into ‘AI in the business’—connecting LLMs to knowledge bases, ticketing systems, and line-of-business applications—the same stolen credential can expose not just past conversations but live data flows. And once you let agents act on behalf of users, a single compromised agentic account can move from being an intelligence leak to an active operator inside your environment.”

Why Care about Keeping Your LLM Accounts Safe from Cybercriminals?

Some readers might think, “Why really worry if a cybercriminal is able to find their way into our company’s LLM Account? It is not like they are breaking into the company’s payroll system or customer database or have absconded with the login credentials for one of the company’s IT administrators?”

“For defenders, the lesson isn’t just that AI adds some extra risk,” said Feick. “It’s that AI changes what it means for an identity or login credential to be powerful. A single LLM account might start life as a glorified notepad—useful, but isolated. As soon as you plug that account into email, cloud storage, HR systems, CRMs, code repositories, and scheduling tools, and then give it permission to act on the user’s behalf, you’ve effectively created a new operational role inside your business. And if you’ve got an ecosystem of those accounts connected and able to talk to each other, one compromised account can be used to start a prompt injection chain and compromise a whole ecosystem of LLM agents without needing any other conventional cyberattack techniques. Thus, the blast radius spans every connected system.”

“The good news is that most enterprises aren’t fully there yet,” explained Feick. “Many still have only one or two AI tools in production, often with limited integrations. In that world, treating AI accounts and API keys as high-value identities and extending your existing identity, endpoint, and cloud security controls will cover a lot of ground.”

“But as you move from ‘we allow AI’ to ‘we rely on AI’—especially agentic patterns where tools can take actions, not just generate text—you need to model that ecosystem explicitly,” said Feick. “Which AI identities can do what? Which systems do they touch? What happens if one of them is hijacked? If you don’t answer those questions up front, attackers will eventually answer them for you, using the same credentials you issued to make the business more efficient.”
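One way to start answering those questions is to write the AI ecosystem down as a graph and ask what a hijacked identity could reach. The Python sketch below is a minimal illustration, not a product feature or a TRU tool: the agent names, system names, and the `ACCESS_GRAPH` inventory are all hypothetical, and a real inventory would come from your identity provider and integration settings.

```python
from collections import deque

# Hypothetical inventory: each AI identity or system maps to what it can
# reach or trigger. An edge from "email" to an agent models an agent that
# reads inbound mail and could therefore be fed an injected prompt.
ACCESS_GRAPH = {
    "sales-assistant-agent": ["crm", "email"],
    "it-helpdesk-agent": ["ticketing", "hr-system"],
    "email": ["it-helpdesk-agent"],
    "crm": [],
    "ticketing": [],
    "hr-system": [],
}


def blast_radius(graph: dict, compromised: str) -> set:
    """Breadth-first search: everything reachable from one hijacked identity."""
    seen = {compromised}
    queue = deque([compromised])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    seen.discard(compromised)  # report only what lies beyond the entry point
    return seen


if __name__ == "__main__":
    for identity in ("sales-assistant-agent", "it-helpdesk-agent"):
        print(identity, "->", sorted(blast_radius(ACCESS_GRAPH, identity)))
```

In this toy inventory, the sales agent reaches every other node because it can send email that the helpdesk agent reads, while the helpdesk agent alone reaches only its two back-end systems. Mapping edges like these is what turns "which AI identities can do what" into something you can review.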

Four Simple Steps to Keep Your LLM Accounts Secure

The TRU team and Alexander Feick recommend four important but simple steps for keeping your LLM Accounts safe. You can do them individually, but of course they work better if you can mandate them across your company. After all, when it comes to AI Security and Cybersecurity, “An Ounce of Prevention is Worth a Pound of Cure.”

1. Turn on strong MFA

Most computer compromises still start with a stolen password. Strong MFA shuts that door, especially when it uses passkeys or hardware keys that can’t be phished. Unexpected MFA prompts should be treated as attempted access, not annoyance. Enforcing strong MFA everywhere—and blocking access when it’s missing—removes one of the simplest and most common failure points.

2. Do not reuse passwords

A reused password turns one breach into many. A password manager solves this by generating long, unique passwords and keeping them out of circulation. When the same password shows up in more than one place, it needs to be changed before it creates a wider incident. Making password managers standard and monitoring for weak or breached credentials eliminates a predictable source of risk.

3. Treat “remember me” like a password

Saved session cookies are effectively login tokens to your online accounts, and attackers target them because they often bypass MFA entirely. Staying signed in on “unmanaged devices” or running unvetted browser extensions expands the attack surface in ways people rarely notice. Logging out on shared machines and curating extensions reduces that attack surface immediately. Requiring re-authentication for sensitive actions and limiting access to “managed devices only” keeps session cookie theft from becoming a quiet entry point.

4. Use work accounts, not personal ones

Personal accounts offer no visibility, no governance, and no clean way to remove access. When business work takes place there, it creates blind spots that are hard to unwind. Company-managed accounts restore control through SSO, clear access boundaries, and reliable off-boarding. Keeping paid or elevated access limited to the roles that need it reduces the potential damage when something goes wrong.
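Two of the steps above, checking for reused passwords and requiring re-authentication for sensitive actions, are easy to sketch in code. The Python below is an illustration only: the vault format, the action names, the `Session` fields, and the 15-minute threshold are all assumptions made for the example, not settings from any particular LLM provider or identity platform.

```python
import hashlib
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


def find_reused_passwords(vault: dict) -> list:
    """Return groups of account names that share one password.

    `vault` maps account name -> password (a hypothetical export format).
    Grouping by SHA-256 digest only avoids using plaintext as a dict key;
    it is not a password-storage scheme.
    """
    by_digest = defaultdict(list)
    for account, password in vault.items():
        digest = hashlib.sha256(password.encode("utf-8")).hexdigest()
        by_digest[digest].append(account)
    return sorted(sorted(g) for g in by_digest.values() if len(g) > 1)


MAX_AUTH_AGE = timedelta(minutes=15)  # assumed policy threshold
SENSITIVE_ACTIONS = {"export_chat_history", "create_api_key", "add_connector"}


@dataclass
class Session:
    user: str
    last_authenticated: datetime
    device_managed: bool


def decide(session: Session, action: str, now: datetime) -> str:
    """Return 'deny', 'reauthenticate', or 'allow' for a requested action."""
    if not session.device_managed:
        return "deny"  # managed devices only
    stale = now - session.last_authenticated > MAX_AUTH_AGE
    if action in SENSITIVE_ACTIONS and stale:
        return "reauthenticate"  # a saved cookie alone is not enough
    return "allow"


if __name__ == "__main__":
    vault = {"chatgpt": "hunter2", "vpn": "hunter2", "bank": "x9!Lq"}
    print(find_reused_passwords(vault))

    now = datetime(2025, 12, 1, 12, 0, tzinfo=timezone.utc)
    session = Session("alice", now - timedelta(hours=2), device_managed=True)
    print(decide(session, "create_api_key", now))
    print(decide(session, "send_prompt", now))
```

The point of the second function is that a stolen session cookie would still hit a fresh authentication check before it could export chat history or mint an API key, which narrows what a stealer log is worth to a buyer.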

To learn how your organization can build cyber resilience and prevent business disruption with eSentire’s Next Level MDR, connect with an eSentire Security Specialist now.

ABOUT ESENTIRE’S THREAT RESPONSE UNIT (TRU)

The eSentire Threat Response Unit (TRU) is an industry-leading threat research team committed to helping your organization become more resilient. TRU is an elite team of threat hunters and researchers that supports our 24/7 Security Operations Centers (SOCs), builds threat detection models across the eSentire XDR Cloud Platform, and works as an extension of your security team to continuously improve our Managed Detection and Response service. By providing complete visibility across your attack surface and performing global threat sweeps and proactive hypothesis-driven threat hunts augmented by original threat research, we are laser-focused on defending your organization against known and unknown threats.