CHAPTER 10

Shadow AI & Vibe Coded Applications

By now, trust has been mapped in principle and tested under strain. What remains is to see how it behaves in motion. This chapter steps inside the workflow itself, the point where good intentions meet real automation. Here, we watch how "Shadow AI" takes root: how a quick prompt becomes a team script, then a quiet dependency. It's the turning point from theory to production, and from human oversight to system behavior. To make that leap safely, the work has to move along rails: predictable tracks that keep automation from veering off course. These rails aren't exclusively software features; they're the rules, constraints, and checkpoints that let innovation run fast without running wild, the way a railway lets a train run at speed without leaving the track.

When the prototype becomes the system

I've watched a weekend demo become a system of record. A teammate mocked up a screen to prove a point — a few buttons, a prompt, some connectors. By the following Monday, people were relying on it. Nobody owned it. Nothing was logged. When it misbehaved and caused a critical error, the room went quiet. Even the person who "built" it couldn't explain how it worked — most of it had been generated by a model. The issue wasn't speed or intent. It was business dependence without visibility.

Shadow IT used to wave red flags we recognized — a rogue SaaS purchased on a corporate card, an Excel macro only one analyst could run. Shadow AI arrives in friendlier clothes: a chatbot that drafts refunds, a quick workflow that posts customer notices, a thin UI written instantly by AI that looks official because it carries a team logo and feels polished. The problem isn't speed; it's that people shift where they anchor trust — the trust that underpins the business — without realizing it. Left resting on a model, we get activity without accountability: plenty of logs, little understanding of why we can trust the new way of doing things. Anchored properly in guardrails, evidence, and named humans, the same speed becomes tractable. And while speed helps the business, unmanaged speed widens the blast radius. We don't need to slow people down; we need to move trust out of the black box and into systems and roles that can take accountability for the work.

This chapter extends that same accountability layer into the world of vibe-coded apps — software assembled by non-developers using natural language and drag-and-drop logic, often with AI quietly doing the heavy lifting. It's the next evolution of shadow systems: not malicious, just invisible to the controls that once kept trust anchored. But the real challenge isn't any single tool or workflow — it's the production line itself. We've entered a world where anyone can spin up a micro-system that touches customers, data, and brand. Here, we focus on how to secure that creative pipeline: to make the work observable, ownership explicit, and verification cheap enough to scale. The goal is simple — preserve the creative speed that makes these systems valuable, while ensuring the enterprise can trust not just the code it didn't write, but the process that keeps producing more of it.

What "vibe coding" actually is

Vibe coding shouldn't be a slur. It's shorthand for how people now build — where a domain expert can describe a task in plain language and get a working interface in minutes. They understand the work and the outcome; they may not understand the generated code. That's not negligence; it's a structural gap in the emerging software production line. And the risk isn't in the experimentation; it's when we create unexamined dependence.

Developers often bristle at this term, and for good reason. Done badly, vibe coding produces brittle, undocumented tools that can't be maintained or trusted. But done well, it unlocks a level of iteration and alignment that traditional development cycles simply can't match. It lets experts automate their own workflows while engineers focus on the guardrails, connectors, and shared services that make that freedom safe. In other words, vibe coding isn't a rebellion against software discipline — it's what happens when the business side finally gets access to the same leverage developers have always had.

Over the past year, I've found three truths consistently hold when people start building this way:

| Truth | Why it matters |
|---|---|
| Natural language is a powerful programming interface, but a terrible system of record. | People can usually describe what they want, but not why or how you can trust it later. Left unguided, the process loses the replicability, auditability, and accountability we expect as standard from software, because the code in question is compiled by an AI from natural language instructions. |
| Generated code can be correct and still opaque. | The model might produce flawless results that no one can explain or modify. Even if it works reliably, without someone understanding what is happening under the hood and taking accountability for it, any unverified output isn't something you can trust or scale. |
| People will depend on anything that reliably saves them time. | Once a shortcut solves a problem, it becomes production. Policy always lags behind convenience, so governance must start at the moment dependence begins. |

If those points hold, and in my experience they do, banning vibe coding isn't a defense against risk; it's either self-inflicted blindness to Shadow AI or a competitive disadvantage created by denying non-developers access to the most powerful leveler for software production we've ever seen. The proper answer is to build a system of controls that make that speed observable, repeatable, and accountable: guardrails engineered and maintained by technical experts so the rest of the business can embrace vibe coding safely at full speed.

Converting Shadow AI from Risk Nightmare to AI Production Line

Many organizations already have the functional pieces of an AI development stack that lets non-developers safely produce working software solutions to their problems; they just haven't applied the discipline that we've discussed throughout this book. In most cases, these pieces weren't designed together, and most enterprises haven't combined them into a single security and trust system. But they're now where the real AI work actually happens: where prompts turn into workflows, workflows turn into customer-facing actions, and language turns into software. Understanding these three layers, and governing how they interconnect in your enterprise, is how Shadow AI's speed can be made honest and sustainable.

Here are the components you need to select and secure within your enterprise to turn your Shadow AI nightmare into a disciplined AI Production Line; they are likely familiar to you:

| Pillar | Core Purpose | In Practice (Examples) | How It's Normally Used |
|---|---|---|---|
| An AI Prompt Workspace | The environment where people meet models. Prompts, retrieved context, and generated results become shared work once they influence others. | ChatGPT (Custom GPT Editor), Microsoft Copilot Studio, OpenWebUI | Captures low-level intent for individual AI actions. Turns prompt engineering iterations from a code practice into general, business-auditable steps: a record of what is tasked to AI, by whom, and why. Defines how AI decisions are shaped by non-engineers. |
| A Workflow Platform | Where durable logic lives. Fast AI-authored workflows become standardized, versioned, and policy-aware. | Tines, SOAR systems, Power Automate, Zapier, n8n | Converts AI tasks and other engineering-supported software actions into reliable, business-reviewable processes. Introduces typed actions, approvals, and observability so automation remains auditable without slowing iteration. |
| A Vibe Coding Platform | AI-assisted development environments where natural language produces functioning code or applications. These systems often create interfaces, integrations, or agents that run atop existing workflows and data. | Lovable, Cursor Agents, Replit Agents | Represents a new edge of software creation, where non-developers generate code without realizing they're developing. Engineers may use Cursor too, but the focus here is on the portion of such tools that supports non-technical users. |

None of this is exotic. Many enterprises already have some of these components officially sanctioned — fewer have thought about them as parts of an interconnected single production line. The discipline for a true AI production line lives at the seams between them: where a prompt becomes a process and where a workflow becomes something a customer sees.

For leaders, the first task here is awareness and recognition. Somewhere in your organization, these layers probably already exist, officially or unofficially, and are solving problems: an AI workspace where people experiment, a workflow platform where people string actions together, and a vibe coding platform where people are compiling software from natural language. Knowing where they are being used, how, and by whom is the first step to securing them.

The next sections break down each layer in turn: how it works as part of the larger whole, where the risks appear, and the questions leaders should be asking to keep speed honest in the AI Production Line without losing control.

The AI Workspace

When to stop treating your prompts like private mail.

This is where AI work begins on the production line: wherever people in your company meet models. An AI workspace is any environment that lets an employee use natural language to get work done by prompting a model. It might look like a chat window, a prompt editor, or a customized assistant inside a productivity suite. Whether it's ChatGPT, Copilot Studio, or an internal tool built on OpenWebUI, the pattern is the same: a human describes intent, the model generates an output, and something operational in your business happens next.

In the early days, these workspaces were treated like notebooks: private places to experiment. Today they've become the front door to real business processes. The moment a model's output proves useful, it gets shared, sent, and reused. We need to recognize this as the moment a private workspace tool becomes an emergent shared process. AI is no longer a private sandbox; it's part of production. It just relies on humans copying and pasting between it and other systems to keep things moving.

This shift is easy to miss, especially for security teams used to treating prompts as confidential communications — a sort of privileged conversation between an employee and a trusted digital assistant. But prompts aren't private messages; they're work instructions. They function less like an email to a colleague and more like the KB article defining a business process or a directive to an external subcontractor. When we treat them as secret rather than shared, we lose the context the business needs to understand what decisions are being made and why.

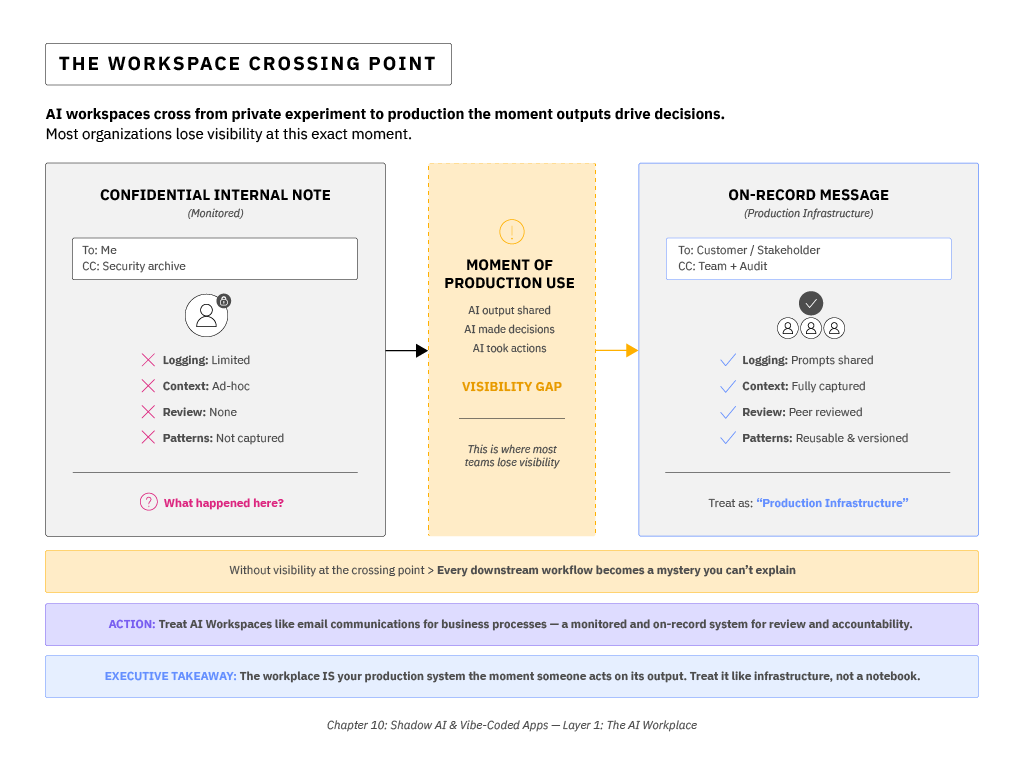

That transition — from private experiment to shared process — is where most organizations lose visibility. Once an AI workspace becomes a shared surface for action, it's the only system that records why the model produced what it did. The prompt is the instruction, the retrieval context is the evidence, and the result is the deliverable. If we lose visibility here, every downstream workflow becomes a mystery we can't explain after the fact.

The first task for leaders isn't to restrict these workspaces — it's to recognize where they already exist and how much of the enterprise's decision-making has quietly and organically moved into them. The goal is to make the intent of AI use at scale observable. Prompts, context, and outputs need to live in a shared environment where others can review and reuse them safely. This is where governance starts: not with a new policy, but with visibility into how, where, and why people are delegating the thinking, decisions, and actions of the business to AI assistants.

There are simple signals of maturity in this layer. Shared prompts instead of private ones. Persistent context that captures the data sources used. Logging that records what was asked and what was returned. These are the beginnings of accountability — a paper trail for thinking.

Promote a workspace pattern the moment you see the same prompt sequence reused daily by multiple people. When that happens, whoever is monitoring the prompts needs to recognize that it is time to promote that ad-hoc activity out of the AI Workspace and into the next component: a Workflow. On promotion, the work runs on rails: inputs, tools, and side effects are gated and logged before anything crosses the decision perimeter. That's where AI involvement in our business logic becomes durable, versioned, and governed. But it starts here, in the conversational layer where human intent first meets machine execution.
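
The promotion signal described above can be approximated from workspace logs. What follows is a minimal sketch, not a prescribed method: the `PromptEvent` schema, the `promotion_candidates` helper, and the thresholds are all hypothetical, and it assumes prompts have already been normalized to a template identifier.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PromptEvent:
    """One logged prompt run (hypothetical log schema)."""
    user: str
    day: date
    prompt_template: str  # normalized prompt text or template id


def promotion_candidates(events, min_users=2, min_days=3):
    """Return templates reused by several people on several distinct days.

    The thresholds are illustrative assumptions, not recommendations.
    """
    users = defaultdict(set)
    days = defaultdict(set)
    for e in events:
        users[e.prompt_template].add(e.user)
        days[e.prompt_template].add(e.day)
    return sorted(
        t for t in users
        if len(users[t]) >= min_users and len(days[t]) >= min_days
    )


events = [
    PromptEvent("ana", date(2025, 1, 6), "refund-draft"),
    PromptEvent("ben", date(2025, 1, 6), "refund-draft"),
    PromptEvent("ana", date(2025, 1, 7), "refund-draft"),
    PromptEvent("ben", date(2025, 1, 8), "refund-draft"),
    PromptEvent("cy", date(2025, 1, 6), "one-off-summary"),
]
print(promotion_candidates(events))  # ['refund-draft']
```

A report like this does not make the promotion decision; it only tells the person monitoring the workspace where to look first.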

This is where speed begins, and where trust is either captured or lost.

Promotion creates a boundary, but scale demands structure. Once multiple workflows exist, the question shifts from "what should this one do?" to "how do we control all of them?" That's where the platform comes in. Its job isn't to add more intelligence—it's to make sure every intelligent process runs on enforceable rails.

The Workflow Platform

By this point, prototypes are already in motion. The only way to keep speed without inviting chaos is to run the work on rails—predictable tracks that constrain inputs, tool access, and side effects. If a step leaves the rails, it stops. The boundary those outputs must cross to affect customers or systems is the decision perimeter. Everything that crosses it needs proof: who ran it, on what data, with what tools, and what changed.

Access to vibe coding platforms makes it tempting to wire prompt calls from the AI Workspace directly into production via traditional software. That path looks efficient until something breaks and there's no contract boundary the non-developers who produced the tool can inspect.

Non-developer task automation rightly belongs on a workflow platform that has clear ownership and support. You can buy it (n8n or an enterprise peer) or build it, but you cannot pretend that it maintains itself. A team of engineers owns the bricks and the runtime; once that boundary is defined, business teams can safely own the logic they assemble from those bricks.

A serviceable automation platform has:

| Capability | Minimum bar | Why it matters |
|---|---|---|
| Versioned blocks | Named, versioned actions with change notes | Enables rollback and reasoning |
| Typed connectors | Inputs/outputs validated and documented | Protects downstream systems |
| Sandbox runs | Fixtures and test environments | Catches surprises before they matter |
| Policy gates | Role/risk/data-scope checks at execution | Keeps speed inside bounds |
| Observability | Step-level logs tied to prompts and outputs | Builds the end-to-end story |
| SLOs | Uptime/latency/error budgets | Treats the rail like a product, not a script pile. By rail I mean an enforceable track for automation: it constrains inputs, tool access, and side effects, and stops execution if a step leaves the track. |
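
To make the idea of a rail concrete, here is a minimal sketch of one in code. It is an illustration under stated assumptions rather than how any particular workflow product works: the `Rail` class, `RailViolation`, and the `draft_refund` tool are hypothetical names, and real platforms would use richer schemas than Python types.

```python
class RailViolation(Exception):
    """Raised when a step tries to leave the rail."""


class Rail:
    """Hypothetical rail: typed inputs, gated tools, step-level log."""

    def __init__(self, input_schema, allowed_tools):
        self.input_schema = input_schema      # field name -> expected type
        self.allowed_tools = set(allowed_tools)
        self.log = []                         # one entry per executed step

    def check_inputs(self, payload):
        for field, expected in self.input_schema.items():
            if field not in payload:
                raise RailViolation(f"missing input: {field}")
            if not isinstance(payload[field], expected):
                raise RailViolation(f"bad type for {field}")
        extra = set(payload) - set(self.input_schema)
        if extra:
            raise RailViolation(f"unexpected inputs: {sorted(extra)}")

    def run_step(self, tool_name, tool_fn, payload):
        # Gate the tool first, then the inputs; stop before any side effect.
        if tool_name not in self.allowed_tools:
            raise RailViolation(f"tool not allowed: {tool_name}")
        self.check_inputs(payload)
        result = tool_fn(**payload)
        self.log.append({"tool": tool_name, "inputs": payload, "output": result})
        return result


def draft_refund(order_id, amount):
    return f"Refund {amount:.2f} for {order_id}"


rail = Rail({"order_id": str, "amount": float}, allowed_tools=["draft_refund"])
print(rail.run_step("draft_refund", draft_refund, {"order_id": "A-1", "amount": 12.5}))
```

The design choice worth noticing is the ordering: both checks run before the tool does, so a step that leaves the track stops without having changed anything.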

Buy vs. build is a time-to-safety decision. Buy when a platform gives you enforceable rails and visibility at the decision perimeter faster than you can staff it. Build when automation is core to differentiation and you have the people to maintain the rails. Either way, the Workflow Platform is where speed becomes explainable; put automations on a rail you can support.

The Vibe Coding Platform

What these platforms allow people to do is simple and powerful: express a business outcome in plain language and get a working interface almost instantly that appears to deliver it. A sales leader asks for pipeline heat and gets a sortable board with drilldowns. Operations asks for "exceptions in the last 24 hours" and gets a table with buttons that fire off actions. Legal asks for a "policy gap view" and gets a checklist linked to source passages. None of it requires an Integrated Development Environment (IDE). The platform infers structure from language, wires tools to data, sketches a layout, and remembers just enough of yesterday's context to feel coherent today.

Because they compress intent into interface, vibe-coded tools are extraordinary for discovery. They let non-engineers explore the shape of an idea quickly—not as a document or a ticket, but as something you can click. In the past, this level of validation required a sprint and a sympathetic developer. Now it takes an hour and a few prompts.

You'll recognize these systems by what they enable and how brittle they are once you look under the hood. The thing works—until someone else runs it from a different context, or the model adds a small update and breaks everything, or you find buttons that go nowhere and do nothing. When you see an impressive demo that no one can run twice the same way without the original author in the room, you might be looking at a vibe-coded system.

Used wrong, the pitfalls of vibe coding are predictable:

| Risk | What It Looks Like in Practice |
|---|---|
| Unstable logic | The system appears consistent but radically changes behavior as new requests come in for updates; decisions can't be replayed or defended. |
| Hidden wiring | Behind every demo sits improvised connections between tools and data: no documentation, no review, and no single map of what depends on what. No one knows how it works. No one can describe exactly what is happening when a button is hit. |
| No accountable owner | Vibe-coded apps often run from a hosted account; when the person who built them moves on, the logic and history of the tool disappear with them. |
| Uncontrolled access | Early prototypes run with personal credentials or broad tokens, exposing sensitive systems to untracked, over-privileged use. |
| False finish | A polished interface creates the illusion of a deployed capability, when it's really a prototype that can't survive change or audit. |

None of this makes the layer a mistake. It makes it a design surface. In isolation, that is its legitimate purpose. Treat vibe-coded apps as you would a high-fidelity Figma: a fast, shared way to converge on what good looks like—data in view where judgment is made, the right controls at the right step, the right evidence under the cursor. Judge them on clarity, not durability; on whether the interaction makes sense, not whether the result will hold when conditions change. If a team can't make the case on this surface, there's no reason to invest further. If they can, you've earned the right to turn that shape into something the enterprise can stand behind.

Examples make this concrete. A revenue team prototypes a renewal-risk cockpit that surfaces verifiable signals next to each account note. A security-operations lead mocks up a "human-in-the-loop" triage panel that shows the model's recommendation and the sources it relied on, with an explicit approve/escalate path. A policy owner drafts a rules explorer that lets auditors click from a control to the underlying evidence. In each case, the vibe-coded tool proves the interface and the information architecture. It lets stakeholders argue about what should be visible and when—without burning engineering cycles. That's the victory here: alignment at human speed.

Resist the urge to treat raw vibe coding platform outputs as software. From a trust perspective, these creations haven't earned that name. They're working drafts—valuable precisely because they are easy to change, and totally unfit to power anything that matters. When teams start relying on them for production decisions, the symptoms show up quickly: unexplainable divergence after a harmless update; policy logic that moved because a sentence did; outcomes no one can replay with the same evidence. That's what happens when you mistake a prop for reality — it looks perfect right up until you hit it like Wile E. Coyote and realize the tunnel you were aiming for was only painted on the wall.

Assembling the AI Production Line

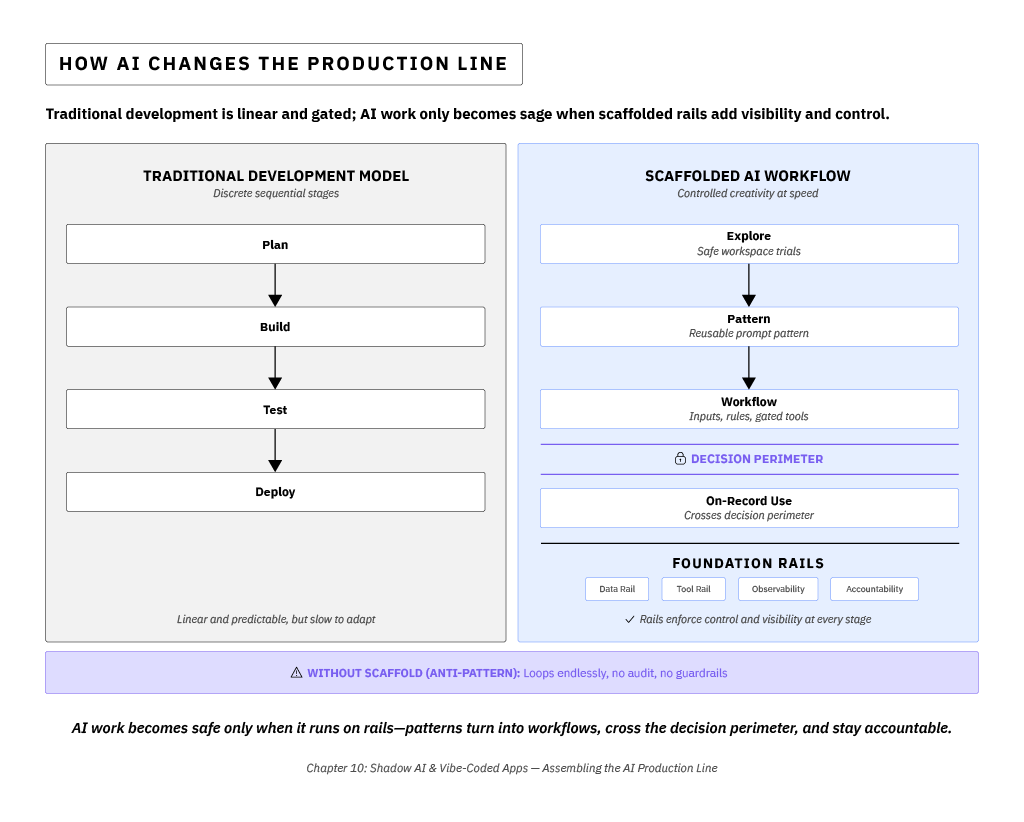

Once individual rails exist, the next step is linking them into a continuous path—a production line for AI work. It looks familiar to software engineers, but the sequence changes once prompts and data share the same pipeline.

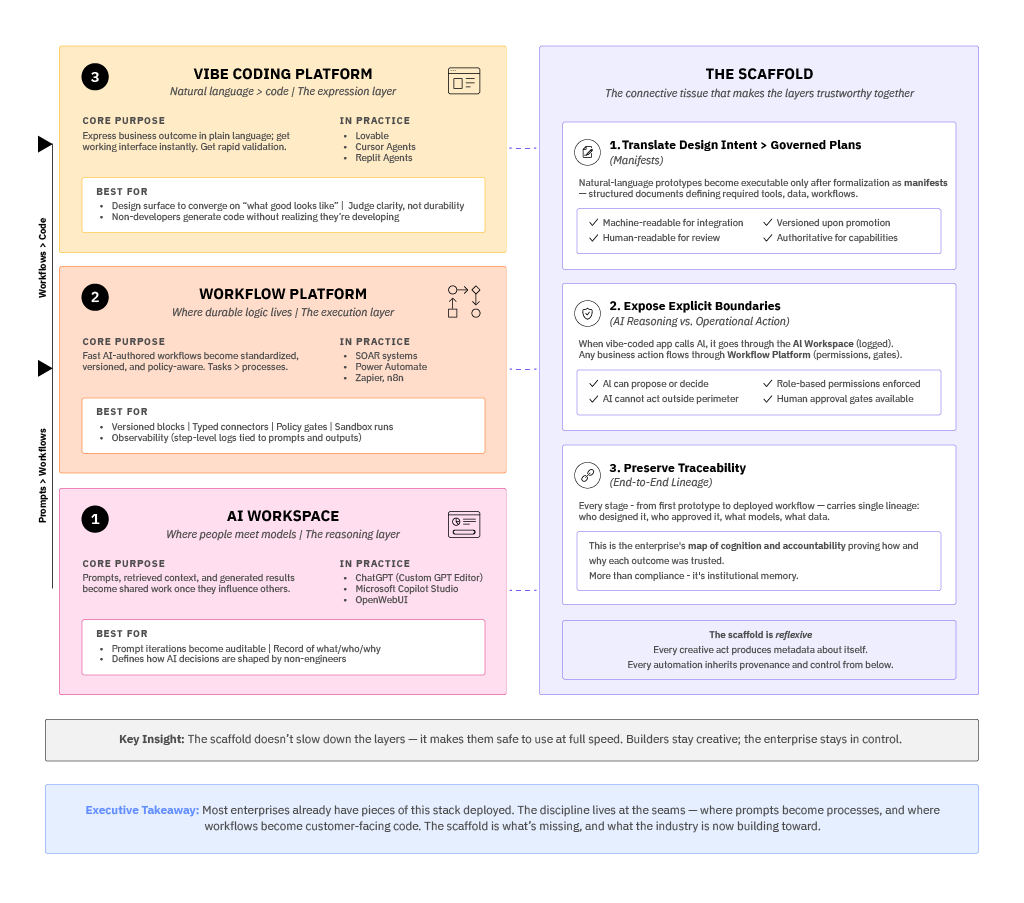

When you step back from the layers, their interdependence becomes obvious. The Vibe Coding Platform provides expression—a way for people to sketch how work should look and feel. The Workflow Platform provides execution—repeatable motion that can be verified and audited. The AI Workspace provides reasoning—the prompts, models, and evidence that guide intelligent behavior. But none of them can stand alone. Each layer protects a different dimension of trust, and without a shared frame to hold them together, that trust scatters.

What the enterprise still lacks—and what the industry is now learning to build—is the scaffold that joins them: a connective layer that turns experiments into systems of record. It binds creative design to accountable execution, linking every model call, workflow, and human decision into a single traceable chain. The scaffold supplies the rails across layers, so behavior stays within bounds as prototypes harden into systems. In a world where logic can rewrite itself overnight, the scaffold becomes the enterprise's memory—proof that what ran yesterday can be understood tomorrow.

Read the figure above left to right: each step builds toward on-record use, where rails validate data, gate tools, and make every action visible at the decision perimeter.

The rails shown above aren't abstractions—they're the structural logic of a trustworthy AI workflow. Each one converts what would otherwise be a fragile prompt into a governed process: data is validated, tools are gated, outcomes are logged, and the decision perimeter becomes enforceable. What follows unpacks that scaffold layer by layer, showing how those rails form the minimum viable infrastructure for safe automation.

This connecting layer doesn't yet exist as a single product, but we can already see its contours. It needs to do three things exceptionally well:

| System Requirement | Purpose and Function |
|---|---|
| Translate design intent into governed plans | Natural-language prototypes from the Vibe Coding Platform become executable only after being formalized as manifests: structured documents that define required tools, data, and workflows. These manifests must be machine-readable for integration and human-readable for review, serving as living contracts: editable during design, versioned upon promotion, and authoritative for what the system can do. |
| Expose explicit boundaries between AI reasoning and operational action | When a vibe-coded app calls an AI function, it does so through the AI Workspace, where prompts, context, and model selection are logged and reviewable. Any resulting business action flows through the Workflow Platform, which applies role-based permissions, policy checks, and human approval gates. The connective layer orchestrates these calls so the AI can propose or decide, but can never act outside its governed perimeter. |
| Preserve traceability across creative and operational states | Every stage, from first prototype to deployed workflow, carries a single, end-to-end lineage: who designed it, who approved it, what models were involved, what data they touched, and which guardrails fired. This is more than compliance; it is the enterprise's map of cognition and accountability, proving how and why each outcome was trusted. |
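
Since no standard manifest format exists yet, the following is only a sketch of what "machine-readable for integration and human-readable for review" might look like. The `Manifest` class, its field names, and the example app and tool names are all assumptions made for illustration.

```python
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class Manifest:
    """Hypothetical manifest: what one app is allowed to use."""
    app: str
    owner: str
    version: int = 1
    models: tuple = ()
    tools: tuple = ()
    workflows: tuple = ()
    data_scopes: tuple = ()

    def to_json(self):
        # Sorted keys keep diffs between versions stable for reviewers.
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    def promoted(self, **changes):
        """Return the next version; the previous one stays unchanged."""
        return replace(self, version=self.version + 1, **changes)


draft = Manifest(
    app="renewal-risk-cockpit",
    owner="revenue-ops",
    models=("approved-model",),
    tools=("crm.read_accounts",),
    workflows=("flag_at_risk_account",),
    data_scopes=("crm:accounts:read",),
)
v2 = draft.promoted(tools=("crm.read_accounts", "crm.read_notes"))
print(v2.to_json())
```

Freezing the dataclass mirrors the "versioned upon promotion" idea: a change produces a new version rather than silently editing the one reviewers approved.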

Taken together, these functions sketch out a control plane for intelligent work. I haven't seen a perfect implementation from a single product or vendor yet, but the direction is clear. The future enterprise stack to enable non-developers to operate an AI production line will need an orchestration layer that can lift ideas from the Vibe Coding Platform, refine atomic AI tasks in an AI Workspace, formalize chains of them in Workflows, and consistently return a result that's both explainable and safe to depend on.

How Builders Actually Use It

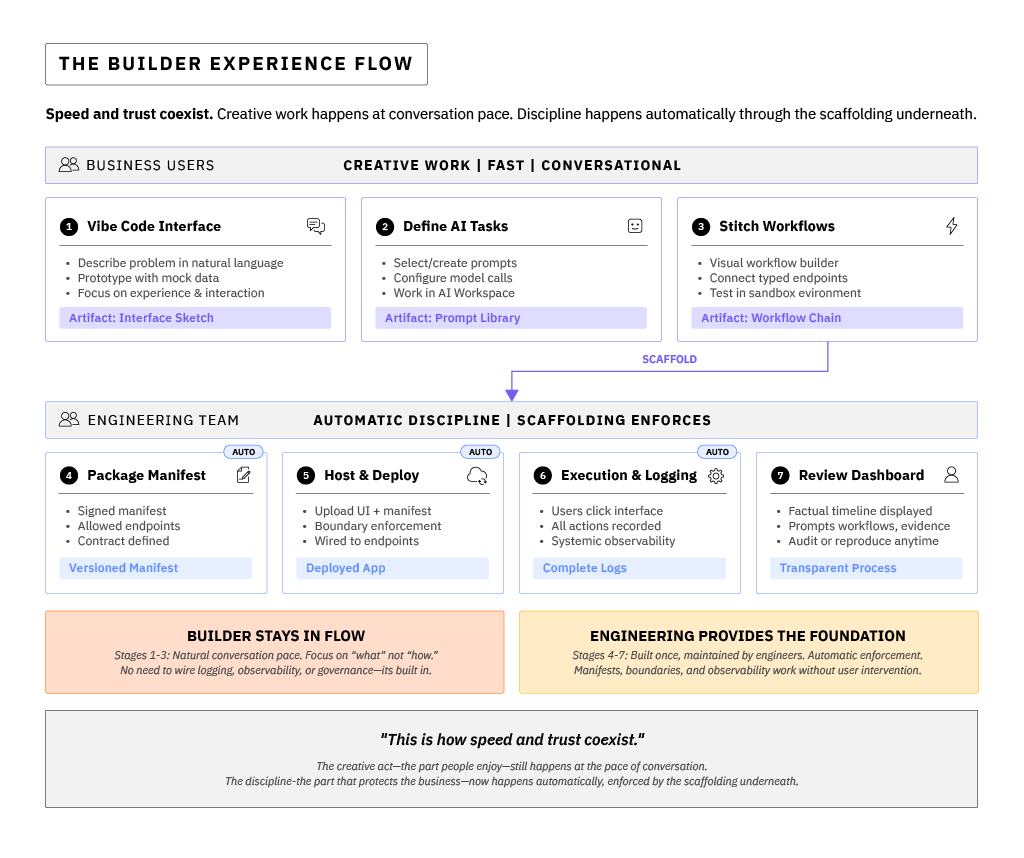

Inside a properly assembled AI Production Line, building an AI app doesn't feel like compliance; it feels like working in a well-lit workshop. The same flows and tooling that once produced hidden wiring now produce visibility and repeatability by design.

The experience starts with people describing their problem and vibe coding an interface. Builders can prototype in natural language using mock data and placeholders until the interaction feels right. The focus is on experience and problem definition, not execution—on getting the shape of the idea to make sense to real people. This is where the Vibe Coding Platform does its best work: fast design, human feedback, and visible alignment before any live data moves.

Once the design holds together, the builder defines or selects tasks for AI to execute in the AI Workspace and then stitches them together visually in the Workflow Platform. That package is the contract with reality: a bundle of approved endpoints that trigger inspectable work, resting on platforms engineers can support. Each endpoint is typed and described (exported as a tool with clear input and output schemas) and wrapped in a signed manifest describing what the vibe-coded app can call when it goes live. This isn't a formality; it's the definition of what "safe to ship" means, and it allows the Vibe Coding Platform (with engineering platform support) to consistently produce functional software with user-inspectable logic.

Next comes hosting. The builder uploads the UI and its manifest to the host space. From that moment on, the app is only able to call what the manifest allows. The host automatically wires the front end to those sanctioned endpoints—no custom glue code, no shadow integrations. The result is creativity with boundaries: the builder defines the experience, the platform and engineering teams define the perimeter, the AI writes the code for the interface.
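
One way to picture "the app is only able to call what the manifest allows" is the sketch below. It assumes a hypothetical `Host` class and made-up endpoint names; a real host would sit behind network and identity controls rather than an in-process dictionary.

```python
class Host:
    """Hypothetical host that wires a UI only to manifest-approved endpoints."""

    def __init__(self, allowed_endpoints, registry):
        # registry: endpoint name -> callable, maintained by platform engineers.
        missing = sorted(set(allowed_endpoints) - set(registry))
        if missing:
            raise ValueError(f"manifest names unknown endpoints: {missing}")
        self._wired = {name: registry[name] for name in allowed_endpoints}

    def call(self, name, **inputs):
        if name not in self._wired:
            raise PermissionError(f"{name!r} is not in the manifest")
        return self._wired[name](**inputs)


registry = {
    "leads.list": lambda region: [f"{region}-lead-1", f"{region}-lead-2"],
    "leads.delete": lambda lead_id: f"deleted {lead_id}",
}
host = Host(allowed_endpoints=["leads.list"], registry=registry)
print(host.call("leads.list", region="emea"))
```

Here `leads.delete` exists on the platform but was never listed in the manifest, so the hosted app cannot reach it no matter what the generated front-end code attempts.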

Then comes execution and logging. As users click through the interface, the hosting environment silently records every back-end action: which MCP (Model Context Protocol) tool or model the app invoked, which workflow blocks executed, what inputs were passed, and what outputs came back. None of this depends on a developer remembering to log events; observability is systemic.
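
Systemic observability can be sketched as a wrapper the host applies to every endpoint, so that logging never depends on the builder. This is an illustrative sketch only: `AUDIT_LOG`, the `recorded` decorator, and `schedule_follow_up` are hypothetical names, and an in-memory list stands in for a real append-only event store.

```python
import functools
import time

AUDIT_LOG = []  # stand-in for the host's append-only event store


def recorded(kind, name):
    """Wrap an endpoint so every call is logged, whether it succeeds or fails."""
    def decorate(fn):
        @functools.wraps(fn)
        def wrapper(**inputs):
            entry = {"kind": kind, "name": name, "inputs": inputs, "at": time.time()}
            try:
                entry["output"] = fn(**inputs)
                entry["status"] = "ok"
                return entry["output"]
            except Exception as exc:
                entry["status"] = "error"
                entry["error"] = repr(exc)
                raise
            finally:
                # Runs on both paths, so no call escapes the record.
                AUDIT_LOG.append(entry)
        return wrapper
    return decorate


@recorded("workflow", "schedule_follow_up")
def schedule_follow_up(lead_id, days):
    return f"follow-up for {lead_id} in {days} days"


schedule_follow_up(lead_id="L-7", days=3)
print(AUDIT_LOG[0]["name"], AUDIT_LOG[0]["status"])  # schedule_follow_up ok
```

In practice the host, not the builder, would apply the wrapper when it wires endpoints, which is what keeps the record independent of anyone's memory or diligence.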

Finally, the builder opens a review dashboard. Instead of a model narrative or an unverifiable trace, the platform renders a factual timeline: prompts, workflows, evidence, and results, all tied to versioned endpoints and manifests. Builders don't wire this review path; the host provides it automatically. What used to be "a working demo only its author understood" is suddenly a transparent process any reviewer can audit or reproduce.

This is how speed and trust coexist. The creative act—the part people enjoy—still happens at the pace of conversation. The discipline—the part that protects the business—now happens automatically, enforced by the scaffolding underneath. The builder still gets to build; the enterprise finally gets to understand what it built.

How Review Works in Practice

When someone presses a button, the platform captures: the current manifest, the model call (with retrieval context), the tool invocations, the workflow steps, policy gates, and the side effects.

| Component | What it answers |
|---|---|
| Event timeline | What the user clicked and when |
| Model context | Prompt and retrieval set the model saw |
| MCP tools | Which tools were invoked with which typed inputs |
| Workflow steps | Which workflow platform blocks executed, with inputs/outputs |
| Policy gates | Which rules evaluated; pass/fail; who approved |
| State diffs | Before/after for touched records |
| Cost & latency | Tokens/compute by step; timing per leg |
| Anomalies | Injection flags, drift indicators, retries |

When this is implemented well, builders can immediately see how their vibe-coded UI invokes their defined AI models and workflows in real time, even though they don't know code. Security teams can audit to reveal risks, insert breakpoints, or require new guardrails as the app grows critical, and engineering can trace action-level faults back to platform components with named owners.

Practical Example: A Sales Dashboard

A salesperson vibe-codes a small dashboard to help them stay organized in the field: it sorts leads, flags priority, and schedules follow-ups. The tool is successful; it's organically and quietly adopted across the sales organization.

Later, security notices that the lead-priority scores are drifting for one campaign. They add a breakpoint: runs matching that pattern require additional evidence. Engineering adjusts features in the scoring workflow. The seller keeps clicking the same button. The system shows its work, and the business retains trust in its sales process.

Review: Securing Your AI Production Line

Each control that follows is an echo of work we've already done. These aren't new doctrines; they're just the condensed lessons of the road behind us. When we said in Chapter 7 that trust must rest on something outside the model, the rules outlined in the table below are examples of that "something" made concrete.

When we mapped decision perimeters and risk tiers in Chapter 8, this is where those boundaries become enforceable form. When we framed accountability in Chapter 9 as a traceable chain between people and systems, these are the weld points that hold that chain together. Even the supply-chain cautions from Chapter 6 and the poisoned-prompt failures from Chapter 5 live underneath them—reminders that vigilance must now be structural, not episodic.

Taken together, the tables below are less a checklist than a distillation: the reflexes of a governed enterprise, where trust, evidence, and velocity can finally coexist.

| Control | Practical rule |
|---|---|
| Per-app identity | Each AI Production Line app has its own OAuth client/service identity; no shared tokens |
| Human-on-behalf-of | Actions run as the user with least privilege; audit trails bind both app and human |
| Allow-lists | Manifests restrict models/tools/workflows; platform enforces at runtime |
| Typed params | Tools and workflows validate inputs before side effects |
| Rate limits | Per-app and per-user throttles; circuit breakers on cost anomalies |
| Signed manifests | Changes require review; platform keeps history and diff |
| Sandboxes | Throwaways run in a fenced workspace; promotion flips to governed wrapper |
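
As an illustration of the signed-manifest control, the sketch below detects any change made to a manifest after review. It uses an HMAC with a shared key from Python's standard library purely as a stand-in; a real deployment would more likely use asymmetric signatures and managed keys. The manifest fields and the key are invented for the example.

```python
import hashlib
import hmac
import json


def canonical_bytes(manifest):
    # Sorted keys and fixed separators so equal manifests serialize identically.
    return json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign(manifest, key):
    return hmac.new(key, canonical_bytes(manifest), hashlib.sha256).hexdigest()


def verify(manifest, signature, key):
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sign(manifest, key), signature)


key = b"demo-key-not-for-production"
manifest = {"app": "sales-dashboard", "version": 3, "tools": ["crm.read_leads"]}
signature = sign(manifest, key)

# Someone quietly adds a tool after review: the old signature no longer matches.
tampered = dict(manifest, tools=["crm.read_leads", "crm.delete_leads"])
print(verify(manifest, signature, key), verify(tampered, signature, key))  # True False
```

The point is the control, not the cryptography: the platform refuses to run a manifest whose contents differ from what a reviewer approved.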

Adding Validation Flows

Control rules alone don't secure a system; they steady it long enough for learning to take hold. The next step is securing the operational AI work by adding validation flows that keep those rules alive in practice. In deterministic software, we used tests to prove code behaved as expected; in AI systems, we must inject validation to prove behavior remains aligned as the world shifts around it. Every escalation, every review, every human check added to apps coming off the AI production line becomes part of a living feedback loop, the same loop we first imagined back in Chapter 4, when we watched model behavior drift in the absence of oversight. Now, that vigilance is built in. The enterprise doesn't just react to failure anymore; it remembers, re-evaluates, and adjusts in real time before decisions turn into actions. This is what it means for trust to be operational: not audited once, but continuously renewed, with evidence attached to each output.

| Validation theme | How Our Scaffold Delivers It Systemically |
|---|---|
| Prompt injection & poisoned inputs | Builds behavior baselines, triggers anomaly flags on the model-context panel, and blocks risky workflows pre-validation |
| Retrieval drift | Compares retrieval manifests across runs and alerts on shifts |
| Decision-risk tiers | Lets builders and security teams require review ladders, enforced per tool/workflow, with sampling rates visible |
| "Cheaper than redo" review | Provides evidence panels that let reviewers verify without re-creating work and track costs |

What the above table really shows is how risk tiering now lives inside the system, not beside it. Every validation flow already maps to a decision ladder — from quiet sampling in low-risk work to real-time human confirmation when stakes climb. We built those tiers back in Chapter 8 to decide who should be in the loop; now the scaffold lets us enforce them without breaking motion. The result is a rhythm that balances automation with judgment: lightweight review where confidence is high, deep inspection where impact is real. It's not new governance — it's governance remembering what it learned. Validation and risk have finally merged into the same circuit.
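As one illustration of the retrieval-drift row, the sketch below compares the set of source IDs retrieved in a baseline run against a later run and raises a flag when they diverge. The choice of Jaccard distance and the 0.5 threshold are assumptions made for this example; the function names are hypothetical, and a production check would likely also weigh ranking and content hashes.

```python
def retrieval_drift(baseline_ids, current_ids):
    """Jaccard distance between two sets of retrieved source IDs.

    0.0 means the two runs retrieved the same sources; 1.0 means no overlap.
    """
    baseline, current = set(baseline_ids), set(current_ids)
    union = baseline | current
    if not union:
        return 0.0  # nothing retrieved in either run, so nothing drifted
    return 1.0 - len(baseline & current) / len(union)


def drift_alert(baseline_ids, current_ids, threshold=0.5):
    """Return True when drift exceeds the (assumed) alert threshold."""
    return retrieval_drift(baseline_ids, current_ids) > threshold


# Usage: the same question, answered from different sources a week apart.
last_week = ["policy-v3", "faq-12", "kb-204"]
today = ["policy-v3", "forum-post-881", "blog-77"]
if drift_alert(last_week, today):
    print("retrieval drift detected: route to review")
```

A check like this says nothing about whether the new sources are wrong; it only makes the shift visible so that a person or a stricter tier can decide.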

Adding Decision Risk and Review Ladders

Every modern enterprise experimenting with AI is already standing on the edge of this design problem. The pieces exist—review tiers, approval flows, risk scoring—but they live in separate tools and teams. What's missing is a shared rhythm that ties them together. Executives probably won't get that rhythm from a vendor anytime soon; it has to be built deliberately into the organization's operating model. The decision-risk and review ladders are how you decide what that discipline looks like in practice in your organization: proportional oversight that moves at the speed of automation without surrendering control. If you lead an AI initiative today, your real task isn't to slow innovation—it's to ensure every tier of risk in your AI production line has an accountable owner and a visible evidence path. Build that, and you're not just experimenting with AI—you're shaping the system that will let your business trust it at scale.

| Risk tier | Example actions | Required control | Evidence to surface |
|---|---|---|---|
| Low | Draft an email; summarize case notes; file an internal ticket | Post-facto sampling (e.g., 10–20%) | Prompt, retrieval sources, action summary |
| Medium | Update CRM fields; adjust entitlements; trigger refunds under threshold | In-app human confirmation | Before/after record + approver ID |
| High | Change policy; move money; broad customer comms; modify access | Dual approval + policy checks | Full trace + reversal path |

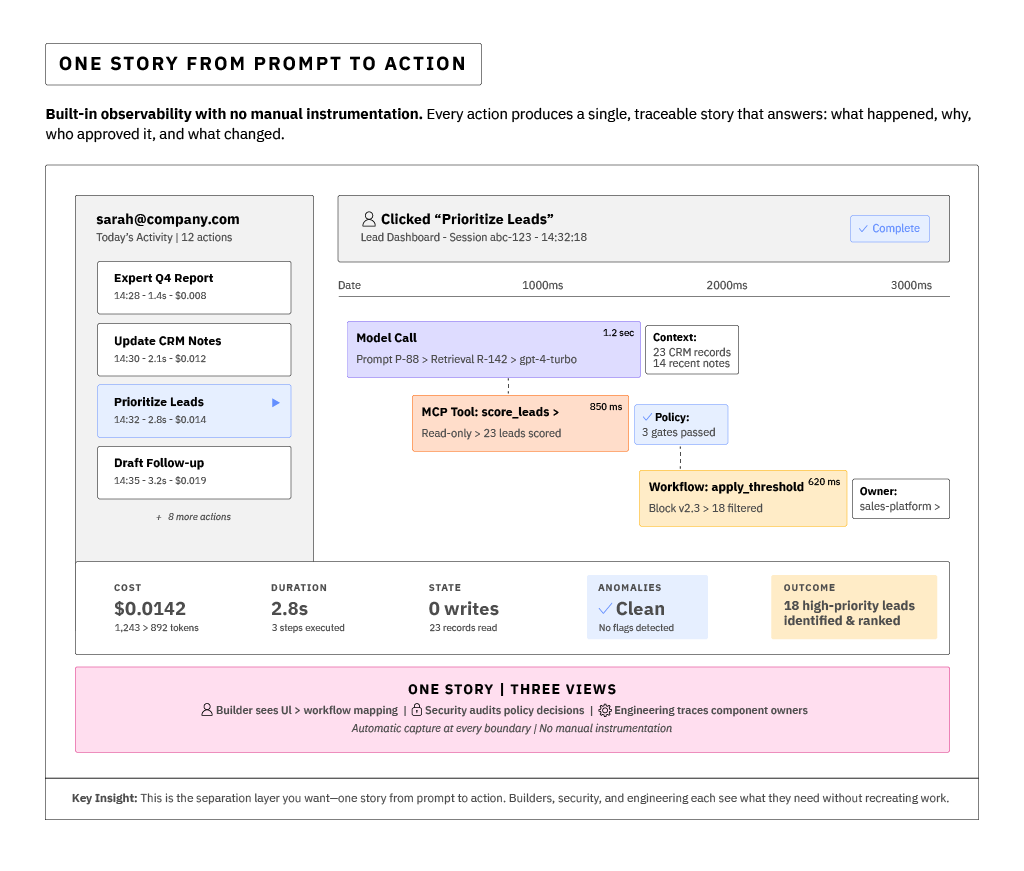
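The ladder in the table can be expressed as a small gate that runs before any action executes. This is a sketch under stated assumptions: the tier names follow the table, but `may_execute`, `selected_for_sampling`, and the default 10% sampling rate are hypothetical choices for illustration, not a description of an existing product.

```python
import random
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Distinct human approvals required before execution, per the table above.
REQUIRED_APPROVALS = {RiskTier.LOW: 0, RiskTier.MEDIUM: 1, RiskTier.HIGH: 2}


def may_execute(tier, approver_ids, policy_checks_passed=True):
    """Return True if the action has the approvals its tier demands."""
    if tier is RiskTier.HIGH and not policy_checks_passed:
        return False
    # Use a set so the same person approving twice counts only once.
    return len(set(approver_ids)) >= REQUIRED_APPROVALS[tier]


def selected_for_sampling(tier, sample_rate=0.1, rng=random):
    """Pick low-risk actions for post-facto review at the given rate."""
    if tier is not RiskTier.LOW:
        return False  # higher tiers were already reviewed before execution
    return rng.random() < sample_rate
```

For example, `may_execute(RiskTier.HIGH, ["alice", "alice"])` is refused because dual approval means two different people, while a low-risk draft runs immediately and is only sometimes pulled for review afterwards.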

Focus on Observability: Demand one story from prompt to action

When something misbehaves, you want a single timeline: the prompt and retrieval context, the model output, the Model Context Protocol (MCP) tool invocations, the workflow steps, the approvals, and the downstream effects. That story shortens time to reason and time to repair. Here's what that can look like:
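In data terms, a single timeline can be as simple as every component emitting events that share one trace ID. The sketch below is a hypothetical minimal shape for that record; the stage names mirror the list in the paragraph above, while `TraceEvent`, `build_timeline`, and `missing_stages` are names invented for this illustration.

```python
from dataclasses import dataclass

# Stages of the story, in the order the paragraph above lists them.
STAGES = (
    "prompt", "retrieval", "model_output", "tool_call",
    "workflow_step", "approval", "effect",
)


@dataclass(frozen=True)
class TraceEvent:
    trace_id: str   # shared by every event belonging to one request
    seq: int        # increasing sequence number within the trace
    stage: str      # one of STAGES
    detail: str     # human-readable summary of what happened


def build_timeline(events, trace_id):
    """Collect one request's events and return them in order."""
    selected = [e for e in events if e.trace_id == trace_id]
    for event in selected:
        if event.stage not in STAGES:
            raise ValueError(f"unknown stage: {event.stage!r}")
    return sorted(selected, key=lambda e: e.seq)


def missing_stages(timeline):
    """List the stages that left no evidence in this timeline."""
    seen = {e.stage for e in timeline}
    return [stage for stage in STAGES if stage not in seen]
```

The second function is the one an investigator reaches for first: a timeline with no `approval` event on a high-risk action is itself the finding.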

The AI Production Line isn't fully real yet, but it isn't just a concept on paper either. In my team's current lab work, we've begun building early prototypes of the AI Production Line and putting the methodologies discussed in this book into practice. We are testing small proof-of-concept (POC) systems that connect prompts, workflows, and human oversight through shared evidence and policy gates. The early results are promising: trust becomes visible, review becomes faster, and the work itself grows easier to reason about. These are still experiments, but they're experiments grounded in practice. We're still learning what holds up, what breaks, and what it really takes to make this architecture real. And as those lessons continue to unfold, I look forward to sharing more.

Conclusion

When I started writing this book, I thought it would be about AI. It turned out to be about leadership. About the responsibility that comes with seeing a technology change the boundaries of what people can do — and realizing that we get to choose how that change unfolds.

From the beginning, I said there were two truths we would have to hold at once: that AI is now essential to staying competitive, and that it introduces a new class of risks capable of utterly destroying the trust enterprises depend on. Those truths haven't changed. What has changed, at least for me, is how I see the work in front of us. The goal isn't to master the technology — it's to design the environment where it can be used responsibly, where speed and judgment can finally coexist.

We've spent these chapters tracing how that can happen. We examined how trust migrates — from people, to systems, to the structures that govern them — and how to keep that trust visible. We walked through attacks, guardrails, control planes, and validation loops, not because I think every executive needs to understand the mechanics, but because you can't lead what you can't picture. You have to be able to see what accountable AI work looks like before you can demand it.

And if there's one picture that stays with me, it's that of the future AI production line. A place where creativity, automation, and accountability move in rhythm — where the model is just one station in a longer flow. Some pieces are currently theoretical, yes, but the principles behind it aren't. We already know how to design for safety without suffocating innovation. We already know how to measure quality, and how to create evidence that travels with work instead of slowing it down. What's new is that we now need to apply those instincts and governance to reasoning itself — to the previously invisible but now essential work of thinking critically — before we can properly leverage AI-generated outputs.

That's the decision in front of us: to treat AI not as a clever assistant, but as part of the enterprise's memory and judgment. Because as models start making decisions, they aren't just executing logic; they're shaping how your organization evolves. The leaders who will succeed in this era are the ones who see such governance not as a tax on progress but as the cost of confidence, and who understand that verification isn't bureaucracy; it is becoming a source of value and a form of brand protection.

I don't believe the future of work is about machines replacing people. I think it's about people learning to work through machines — to delegate without disappearing. Our task as leaders isn't to slow down innovation; it's to make it legible. To build systems that let us remember and audit why each decision was made, not just what the output was. To ensure that every act of AI automation leaves a trace of human intent behind it.

The companies that figure this out will move faster than anyone else, because they'll trust less blindly and rest secure in what they have verified. Their teams will be confident enough to experiment, and their customers will be able to rely on the results. That's what good governance really buys you: permission to move.

I've spent most of my career in security, trying to make sense of the ways technology breaks trust. Writing this, I realized that our job is no longer just to defend systems — it's to design them to be worthy of trust in the first place. We can't stop AI from changing how work gets done, but we can decide what kind of work emerges as the old work is made obsolete.

If we do this well, AI in the enterprise won't be remembered as the moment we built a machine that ended our reliance on human labor. It'll be remembered as the moment we learned how to build systems that let us trust AI: when our reasons for trusting became visible, we learned how to take accountability for AI outputs, and AI's leap forward for humanity finally felt like something we could all stand behind.

So, as you close this book, I'll leave you with a simple question: what kind of future do you want to build?