CHAPTER 8

The Architecture of Control

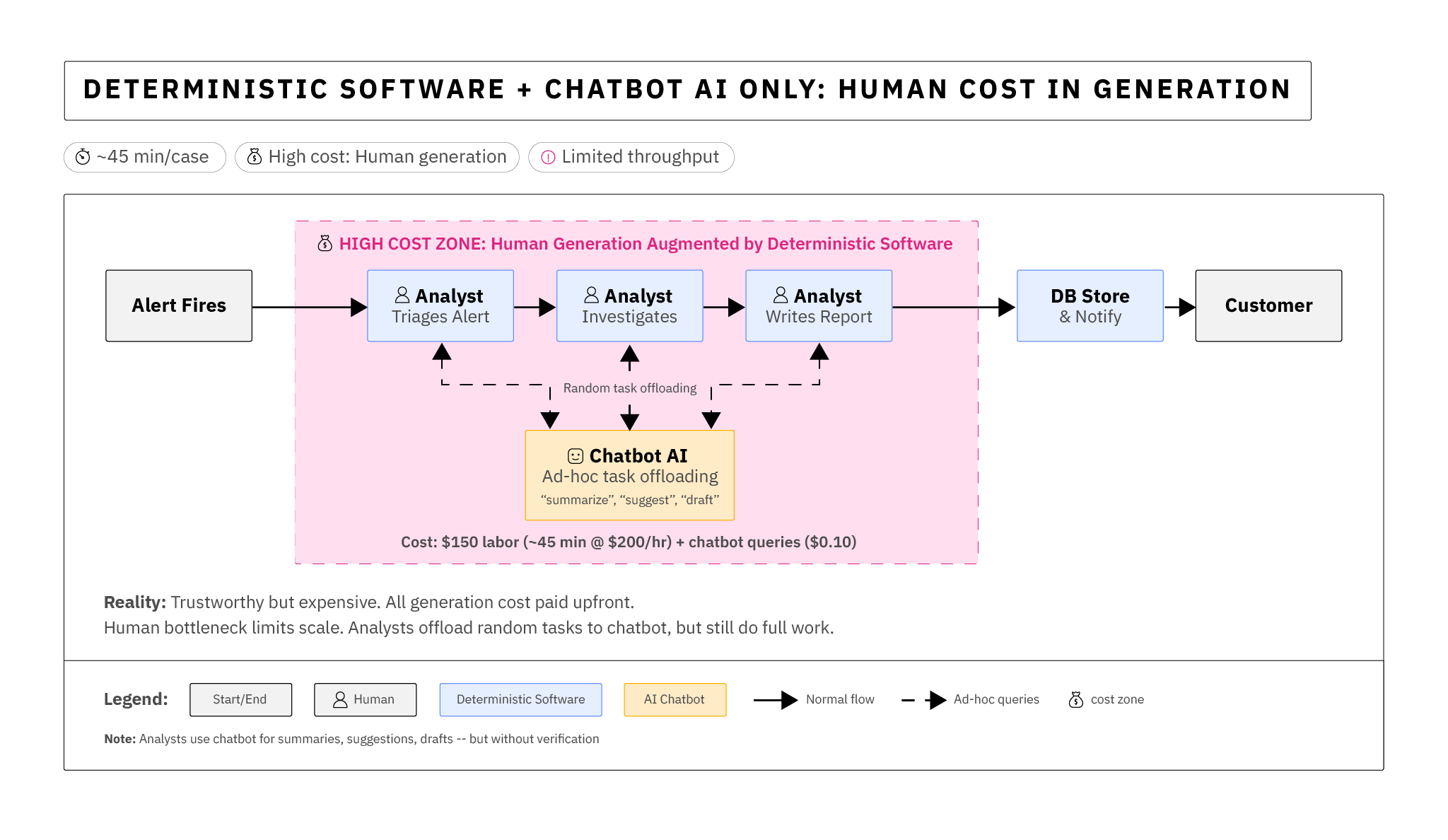

The first time I saw a Security Operations Centre (SOC) leverage a general-purpose chatbot in the investigation workflow, it felt like a breakthrough. Analysts could ask anything — "summarize this alert," "suggest an investigation plan," "draft a customer update" — and the system responded with crisp, confident prose. Analysts didn't deploy AI so much as offload tasks to it whenever the moment felt right.[9]

Then what should by now be a familiar anti-pattern emerged: some analysts placed blind confidence in plausible-sounding AI answers where certainty was required. A junior analyst copied and trusted the AI's evaluation of a command line without verifying it; a customer escalation followed.

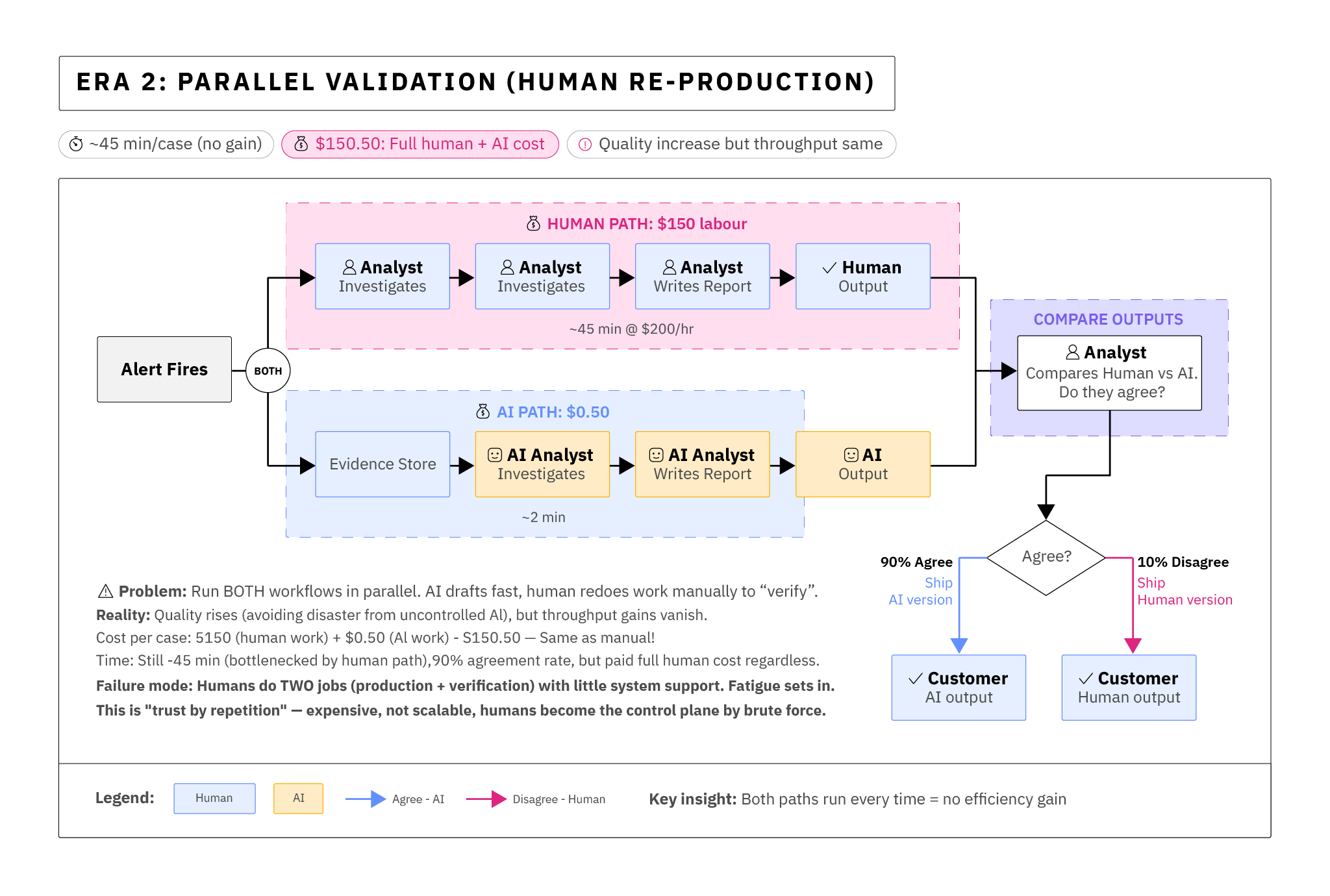

In the second iteration, we built a bigger system with hard-coded prompt flows and many embedded prompts that gave the AI additional directions, trying to increase reliability wherever output was unreliable. It was a new system entirely, but we lacked verification tools, so we forced analysts to independently reproduce every AI-assisted step to "verify" each case. Output quality improved dramatically on average (models write a lot, quickly, and the writing is good overall), but throughput didn't improve because humans were still stuck doing the whole job.

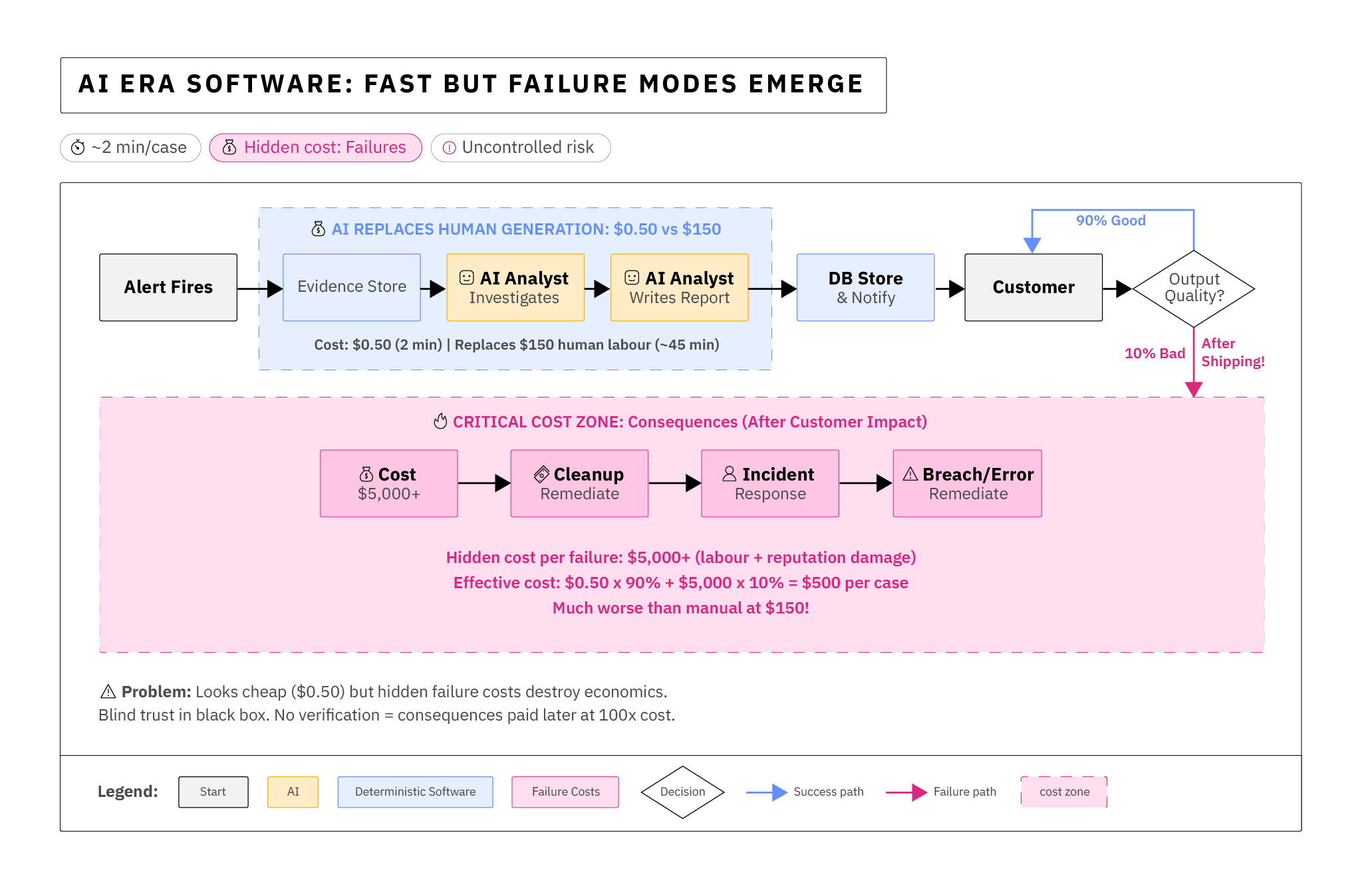

Some engineers wanted to let the AI operate autonomously, without human oversight, to improve operational efficiency while persuading customers to trust the system; they pointed to the high rates of agreement achieved under normal working conditions. Analysts warned of the consequences and kept surfacing individual cases where the AI was wrong in uncorrectable ways. Executives were left to choose between the high costs (and familiar error rates) of the old human system of work and a new AI system that had low error rates but had never faced a direct adversarial attack.

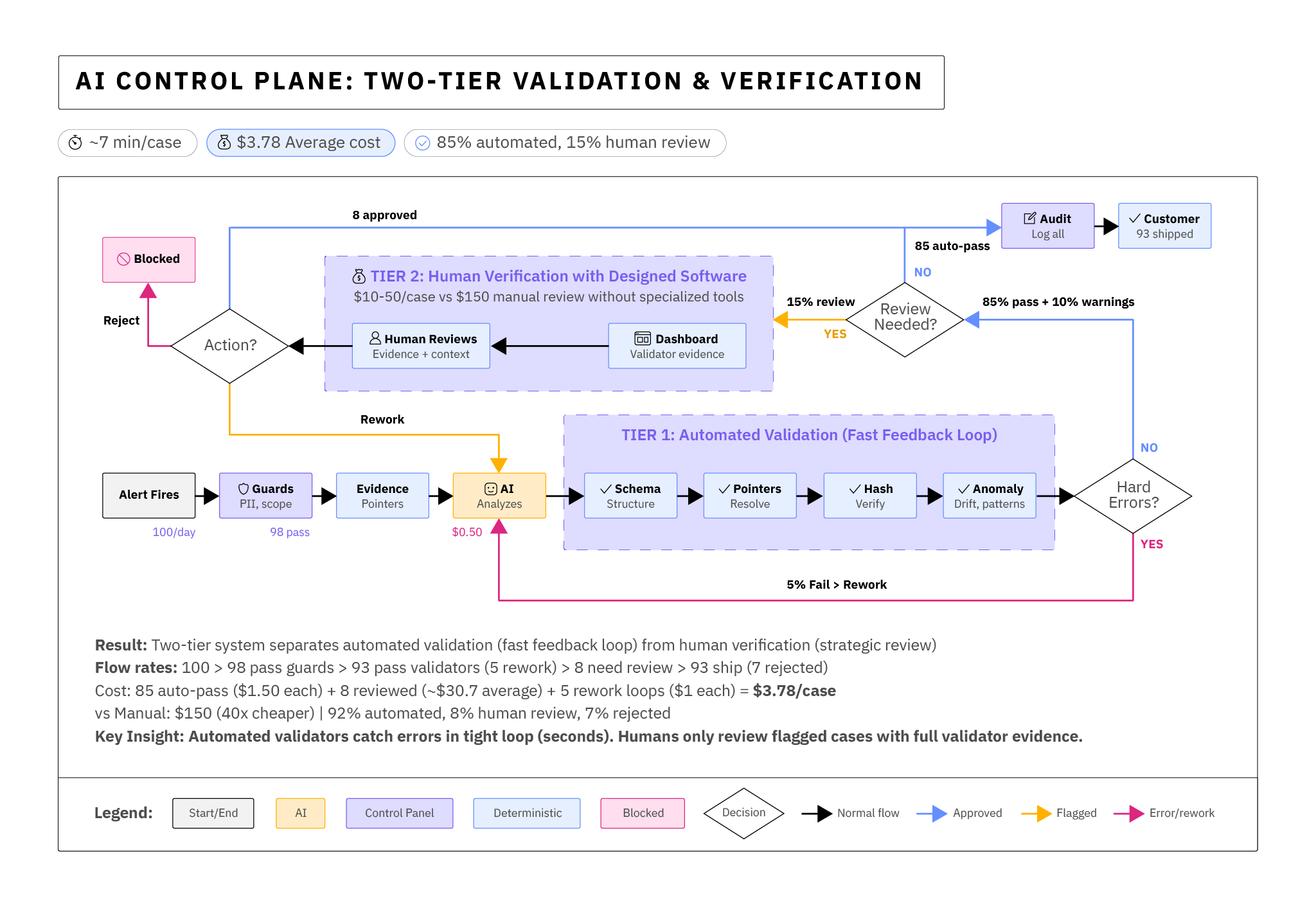

The third iteration we developed is where this chapter lives. We stopped asking, "How do we make the model better?" and started asking, "Where does trust really belong in the overall system of software, AI models, and experts?" The answer was not to leave trust in the generated outputs inside the black box of a model. It was to design a new control plane surrounding it — the fusion of governance and technical systems to make reasons for trust visible, verifiable, and enforceable at scale. Governance defines where and why customers need to purchase that oversight; technical systems let us deliver that oversight (or the tools that support humans to deliver it) with consistency and speed. When done well, humans are now supported by powerful tooling for rapid verification — they are not left to repeat the work the old way.

This is the chapter where we lay out in concrete terms what works and what doesn't in AI control systems. Here we map what a control plane is (and isn't), walk through real workflows in SOC operations, draw parallels to healthcare and legal, show how it simplifies lighter-risk domains like marketing, and hand you artifacts (stub policies, escalation flows, dashboard views) that you can adapt. The goal is simple: bring trust back into the system without sacrificing the gains AI already delivered.

What an AI control plane is — and why you need one

An AI control plane is essentially the monitoring system you build to allow you to trust the outputs of an agentic or embedded AI software system. It is not a dashboard; it is not a PDF policy. It is a governance-plus-technical system designed to:

| Function | Description |
|---|---|
| Anchor trust outside the black box | Move trust in final outputs off the AI models by grounding decisions in immutable evidence, deterministic checks, and accountable human judgment. |
| Surface observability | Show what data was used, which tools were called, and what policies gated behavior, so trust can be inspected, not assumed. |
| Gate sensitive actions | Prevent high-risk outputs from reaching customers unless the required verification and approval checks have passed. |
| Escalate uncertainty intelligently | Route ambiguous cases to the right human, with full context and clear accountability for the decision. |
| Audit every step | Maintain transparent records for regulators, customers, and internal quality programs to prove governance in action. |

If Chapter 7 established the philosophy — AI outputs the work, guardrails and humans provide the trust — this chapter's discussion of the control plane provides practical examples of machinery we must build to make that philosophy operational and economical. Ultimately, we are talking about a new type of tool and workflow that never needed to exist before AI systems changed software, and it addresses the inherent unpredictability, unique threat surfaces, and black-box nature of generated outputs from AI-enabled software systems.

Where it lives (and what it touches)

Start thinking of all AI-enabled software systems as consisting of three distinct layers:

| Layer | Description |
|---|---|
| Deterministic Software | Traditional software that earns trust through inspectability of source code and deterministic predictability: its outputs are consistent, testable, and repeatable. The same inputs always produce the same results, making reliability intrinsic to its design. |
| AI-Era Software | Classical software now infused with AI: specifically, embedded models or agentic behaviors incorporated into the flow, as opposed to chatbot AI. Adding AI raises capability but introduces non-deterministic behavior and runtime uncertainty: the same input can produce different outputs from one run to the next. Legacy security and assurance models assume determinism; AI-era software breaks that assumption. |
| AI Control Plane | A new layer of deterministic software built around AI-era non-deterministic systems to restore trust. It includes policy engines, validators, escalation and review services, observability and logging, and drift and risk monitors. This layer produces artifacts (dashboards, audit reports, customer-facing proofs) that move trust away from opaque models and place it clearly on verifiable, governed systems. |

Your control plane must wrap each embedded model and bring trust back to every non-deterministic AI output and action that carries risk. It doesn't replace intelligence; it orchestrates it — ultimately, it's a system for deciding what actions and outputs from the AI should be rubber stamped, which must be checked, what must be explained, and when humans are invoked to take accountability for edge cases.

Decisive idea: Trust is never free, and generation alone has no market value without it. To adopt AI output in any situation where someone will pay for an output, we must think about how to architect systems so that scaled validation and accountability for the AI output is cheaper than the old ways of human output production — for both customers evaluating output and for our internal reviewers. That's ultimately how AI successfully adds value without compounding risk.

SOC Operations: From "Offloading Trust" to "Operating a Control Plane"

Now let's make it tangible. Below is the same workflow taken across three eras.

Era 1 — Ad hoc Assistance (trust offloaded at random)

In the first era, AI assistance was completely optional in the production flow. Analysts could interrogate an AI for investigation or triage assistance, or delegate tasks, by directing questions to a chatbot that had been trained and configured for internal use.

The architectural problem with this approach is that trust is offloaded at random, and we cannot see where human judgment degrades as analysts accept AI input. In the actual production diagram, all we see is an accountable human following normal processes. A long-term study conducted in partnership with CSIRO showed analysts gradually coming to accept task offloading.[10]

Era 2 — Full AI SOC Automation (with trust achieved by repetition)

In the first implementation of Era 2: Full AI SOC Automation, an error by the AI would have landed on the customer's desk—an unacceptable failure mode that pushed both the consequence and the cleanup beyond our control. As a result, Era 2 in practice ended up looking more like this in our field testing:

In Era 2, we still saw enormous gains in output quality. A human and an AI would both work a case and write a report, but the human report was typically far sparser. When it worked, the AI often produced a higher-quality, more complete deliverable supporting the same conclusion the analyst reached, but the promised cost reductions never materialized. The bill went up: we were paying an AI and a human to do the entire workflow.

Era 3 — A Proper Control Plane (trust supported, visible, and enforceable)

The control plane that was eventually implemented rethought the role of what the analysts should be doing and built tools to account for that. We decided that trust and accountability demanded analysts, but efficiency and economics demanded that they be given new tools and a new focus to the work.

A Practical Checklist for Control Plane Components

| Component | What it does | Why it matters | Executive ask |
|---|---|---|---|
| Policy Engine | Codifies what must be checked/gated based on risk, data class, and action | Enforcement in the system, not on paper | "Show me where policies execute, not just where they're written." |
| Evidence Store | Read-only repository for logs/artifacts with content hashes and timestamps | Prevents model-authored evidence; anchors truth | "Prove this artifact existed at time T and hasn't changed." |
| Deterministic Validators | Non-AI checks for structure, scope, support, signatures | Catches obvious failures quickly and cheaply | "Which checks run automatically before a human sees it?" |
| Escalation Service | Routes yellows/reds to the right human with the right context | Puts people where they add value | "What's the mean time to make a human decision for yellows vs reds?" |
| Observability & Audit | Full trail of inputs, tool calls, outputs, approvals | Regulation, customer confidence, internal QA | "Can we reconstruct a decision in minutes?" |
| Drift & Risk Monitors | Detects behavior shifts, data distribution changes, guardrail hits | Prevents quiet degradation and abuse | "How do we know when the system is changing under us?" |
| Review Workbench | UI for adjudication with manifests, markers, and quick actions | Enables rapid human verification | "Do reviewers decide in clicks, or re-investigate from scratch?" |

Two Escalation Paths

Not all escalation from a control plane is the same. A resilient control plane should separate Production Gating from Governance & R&D Feedback — and instrument for both.

Production Gating: "Can this AI output ship to a customer right now?"

| Aspect | Description |
|---|---|
| Purpose | Decide if a specific AI output or action can be accepted. |
| Trigger Sources | Policy hits, uncertainty thresholds, missing evidence, risk-tier escalation. |
| Actors | On-call approver, subject-matter reviewer, and product owner. |

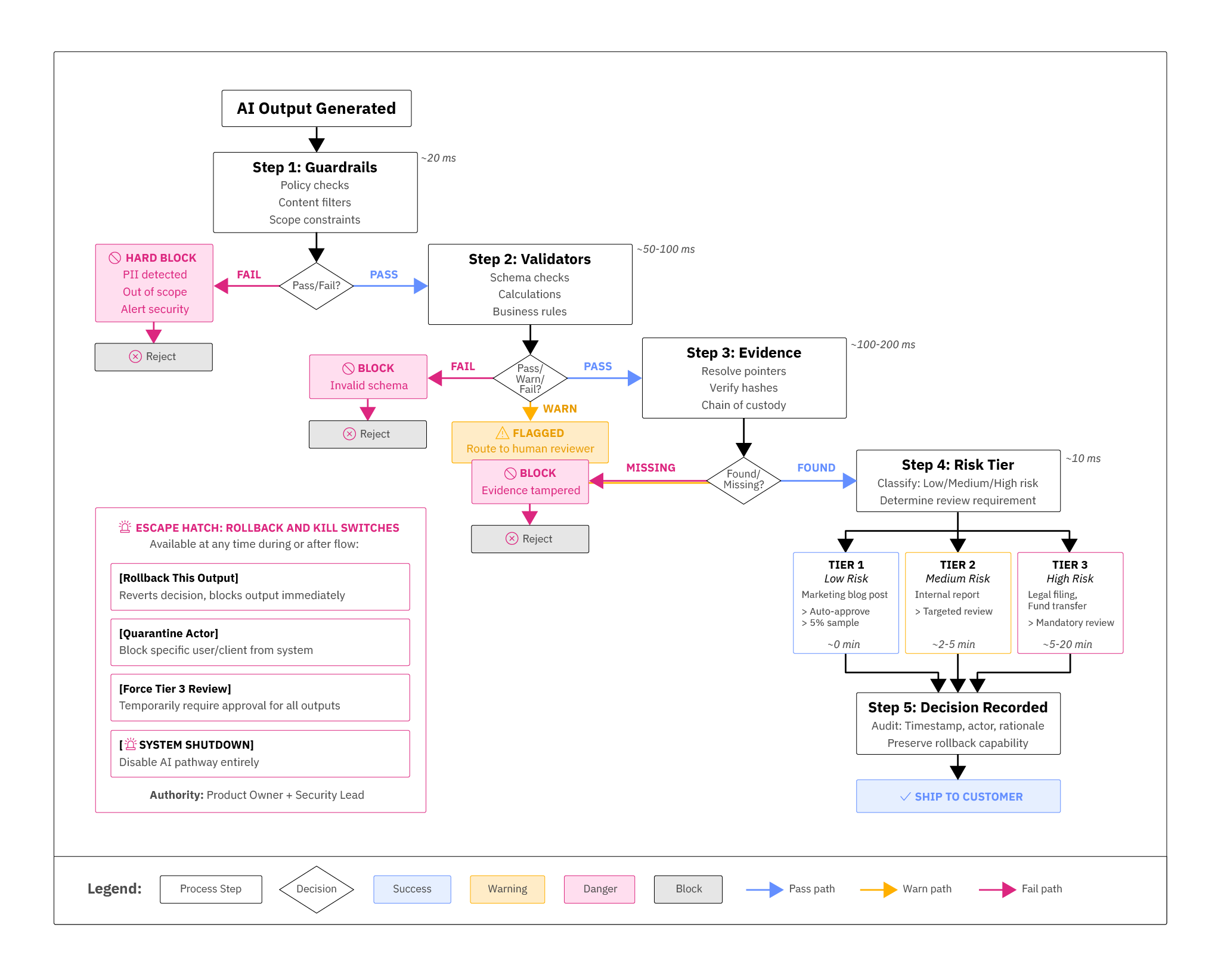

| Canonical Flow |

Production gating is essentially about now. It keeps bad outputs from shipping to customers, and it ensures that good outputs ship with the evidence that makes them trustworthy.
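A minimal sketch of how gating triggers might be routed to an accountable human. The role names follow the actors listed above, but the trigger-to-role mapping, the priority order, and the `Escalation` record are hypothetical choices made for illustration, not a prescribed design.

```python
from dataclasses import dataclass

# Hypothetical mapping from trigger source to the role that must decide.
# Order matters: earlier entries take priority when several triggers fire.
ROUTES = (
    ("risk_tier_escalation", "product_owner"),
    ("policy_hit", "on_call_approver"),
    ("missing_evidence", "subject_matter_reviewer"),
    ("uncertainty_threshold", "subject_matter_reviewer"),
)


@dataclass(frozen=True)
class Escalation:
    output_id: str
    assignee_role: str
    triggers: tuple
    context: dict  # evidence pointers, validator results, risk explanation


def route(output_id, triggers, context):
    """Return None when nothing fired (ship), else an Escalation for a human."""
    fired = set(triggers)
    unknown = fired - {name for name, _ in ROUTES}
    if unknown:
        raise ValueError(f"unrecognized triggers: {sorted(unknown)}")
    for name, role in ROUTES:
        if name in fired:
            return Escalation(output_id, role, tuple(sorted(fired)), context)
    return None


ticket = route(
    "out-17",
    ["missing_evidence", "policy_hit"],
    {"evidence_pointers": [], "validator_failures": ["no source pointer"]},
)
```

The point of the sketch is that the reviewer receives every trigger and the supporting context in one record, so the decision can be made without re-investigating from scratch.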

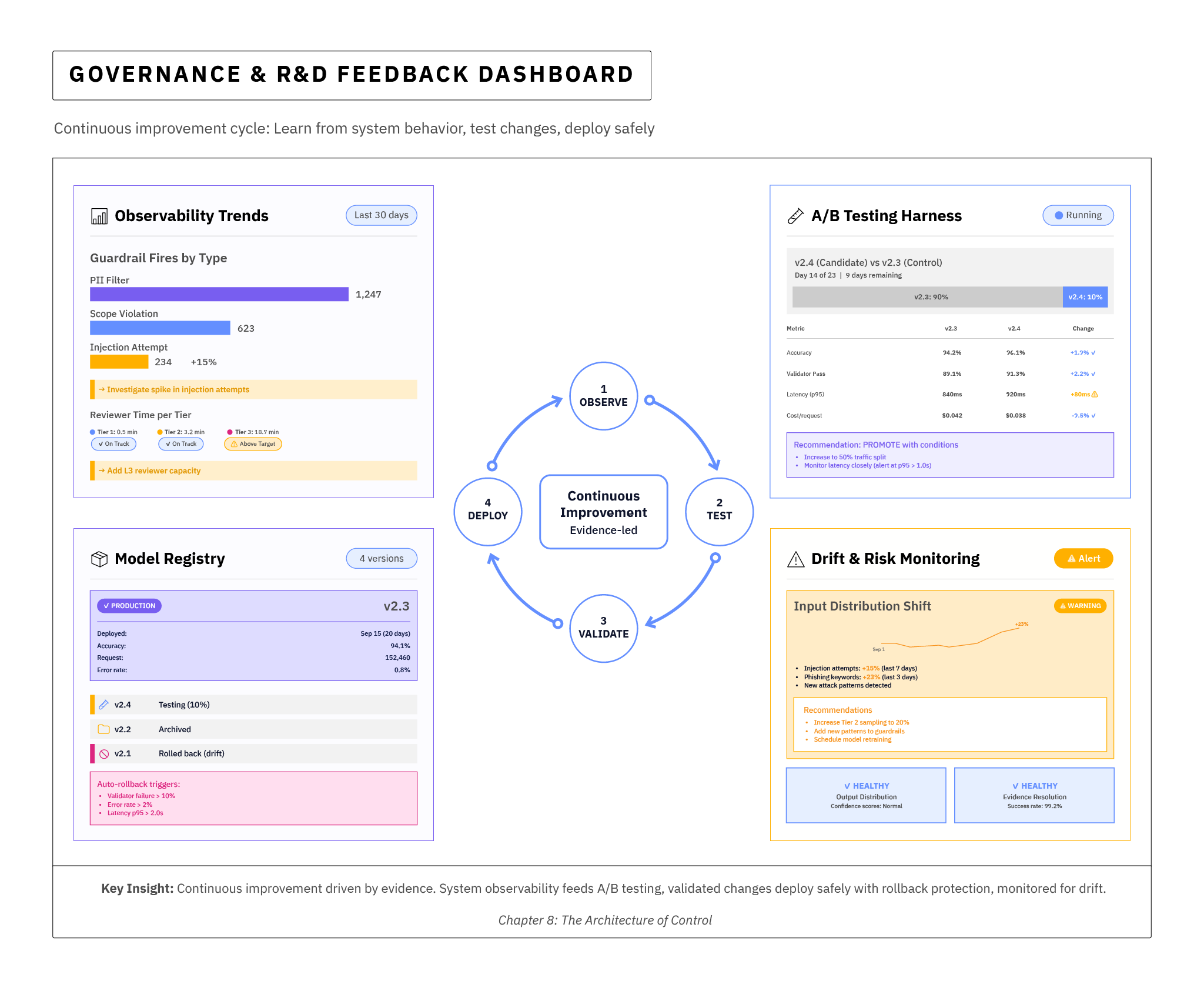

Governance & R&D Feedback: "What should we change long-term?"

| Aspect | Description |
|---|---|
| Purpose | Improve the model system by learning from its behavior. |
| Trigger Sources | Guardrail fire rates, drift metrics, A/B results, reviewer comments. |
| Actors | Model owners, alignment/safety engineers, leadership, governance. |

| Canonical Loop |

This loop ties the control plane into your ongoing R&D cycle so development choices about AI model effectiveness and alignment become nuanced and evidence-led at the lowest levels, not made on gut instinct.
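One of the trigger sources above, guardrail fire rate, lends itself to a simple deterministic monitor. The sketch below assumes a known baseline rate strictly between 0 and 1 and flags drift when a rolling window deviates from it by more than a chosen number of standard errors. The class name, window size, and threshold are hypothetical, and a production monitor would likely track several signals.

```python
import math
from collections import deque


class GuardrailRateMonitor:
    """Flags drift when the rolling guardrail fire rate leaves its baseline."""

    def __init__(self, baseline_rate, window=200, z_threshold=3.0):
        if not 0.0 < baseline_rate < 1.0:
            raise ValueError("baseline_rate must be strictly between 0 and 1")
        self.baseline = baseline_rate
        self.window = deque(maxlen=window)
        self.z_threshold = z_threshold

    def observe(self, guardrail_fired):
        """Record one output; return True if the full window has drifted."""
        self.window.append(1 if guardrail_fired else 0)
        n = len(self.window)
        if n < self.window.maxlen:
            return False  # not enough data yet to judge
        rate = sum(self.window) / n
        # Standard error of a proportion under the baseline rate.
        std_err = math.sqrt(self.baseline * (1 - self.baseline) / n)
        return abs(rate - self.baseline) / std_err > self.z_threshold
```

A `True` result here would feed the governance loop (a ticket for the model owners), not the production gate.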

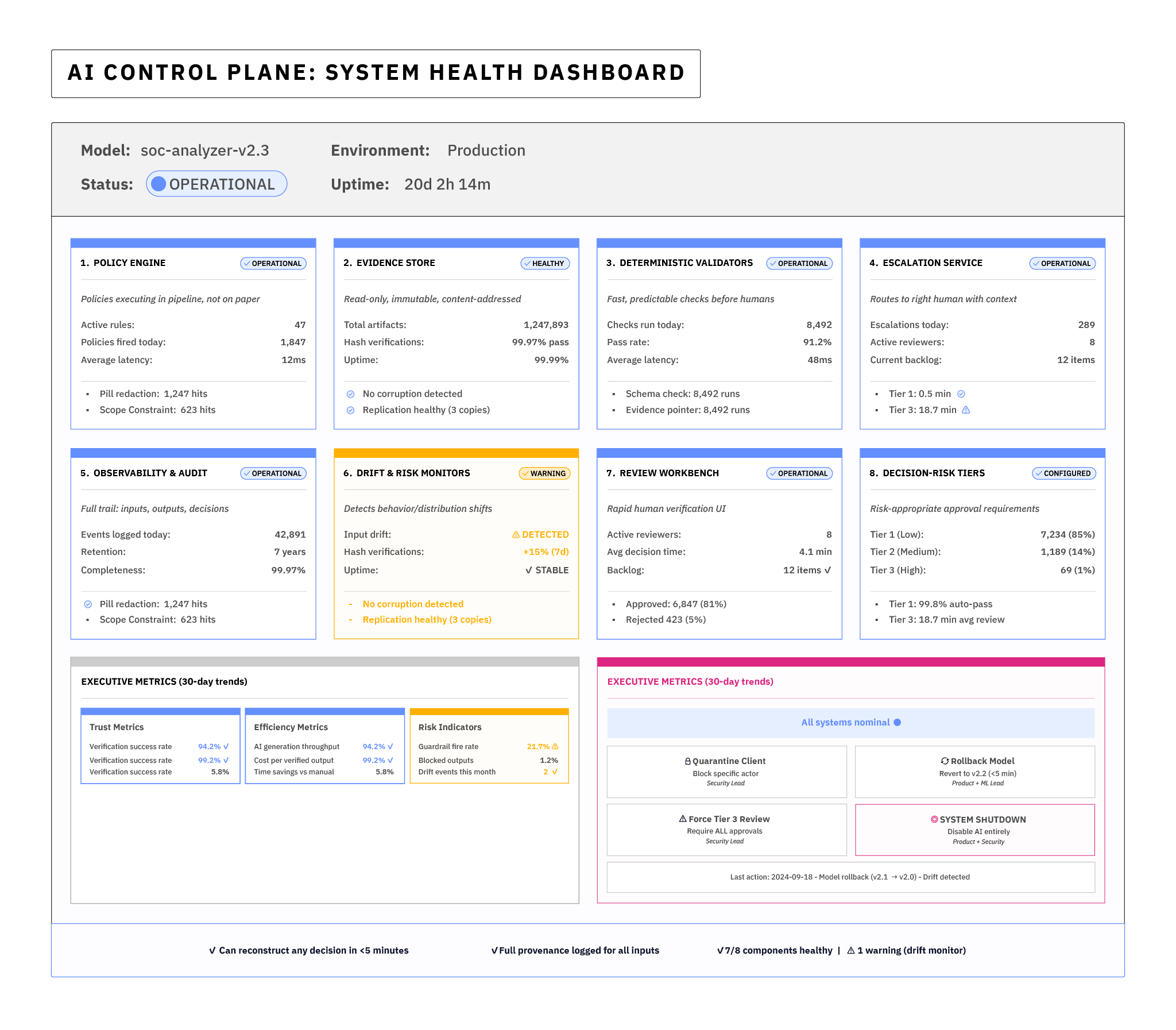

Core Component View of the Governance Control Plane

Above: Example Governance Pane Mock-up

Observability & Telemetry

Capture: inputs, outputs, redactions, guardrail hits, model & policy versions, latency, and evidence pointers (content IDs/URLs with signatures). Store immutably; respect privacy by default (PII hashing/tokenization). Make reason and risk visible, not just result.
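As an illustration, a telemetry record covering these fields could look like the sketch below. The field names, the salted-hash tokenization scheme, and the sample values are all assumptions made for the example; a real deployment would write records to an immutable store and manage salts or keys properly.

```python
import hashlib
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


def tokenize_pii(value: str, salt: str) -> str:
    """Replace a sensitive value with a salted SHA-256 token (hypothetical scheme)."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return "pii_" + digest[:16]


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class TelemetryRecord:
    request_id: str
    model_version: str
    policy_version: str
    user_token: str           # tokenized, never the raw identity
    guardrail_hits: tuple     # names of guardrails that fired
    evidence_pointers: tuple  # content IDs, not copies of the content
    risk_tier: int
    reason: str               # why the output was gated or released
    latency_ms: float
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


record = TelemetryRecord(
    request_id="req-001",
    model_version="model-a",
    policy_version="policy-v12",
    user_token=tokenize_pii("analyst@example.com", salt="demo-salt"),
    guardrail_hits=("missing_evidence",),
    evidence_pointers=("sha256:demo",),
    risk_tier=2,
    reason="held: evidence pointer could not be resolved",
    latency_ms=412.0,
)
```

Note that the record carries `reason` and `risk_tier` alongside the result, which is what makes the decision inspectable later.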

Evidence Registry (Externalized)

Principle: The AI never authors its own proof. It outputs pointers to evidence held in a tamper-evident store the model cannot modify. Think content-addressable storage with cryptographic signatures and retention policies.

- Resolve on verification, not during generation. The verification UI pulls and renders evidence outside the generation loop.

- Chain-of-custody: who uploaded, when, from where; hash + signer identity.

- Health checks: stale/missing evidence invalidates the output automatically.
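The three properties above can be sketched with a small in-memory, content-addressed store. This is an illustration under stated assumptions: `EvidenceStore`, its status strings, and the age-based staleness rule are hypothetical, and cryptographic signatures are omitted (only the content hash and custody metadata are shown).

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class EvidenceEntry:
    content: bytes
    uploaded_by: str      # chain-of-custody: who
    source: str           # chain-of-custody: from where
    uploaded_at: datetime  # chain-of-custody: when


class EvidenceStore:
    """Append-only, content-addressed store. The model only ever sees IDs."""

    def __init__(self, max_age: timedelta):
        self._entries = {}
        self._max_age = max_age

    def put(self, content: bytes, uploaded_by: str, source: str, now=None) -> str:
        content_id = "sha256:" + hashlib.sha256(content).hexdigest()
        # Never overwrite: an existing ID keeps its original custody record.
        self._entries.setdefault(
            content_id,
            EvidenceEntry(content, uploaded_by, source,
                          now or datetime.now(timezone.utc)),
        )
        return content_id

    def resolve(self, content_id: str, now=None):
        """Return (status, entry). Called at verification time, not generation."""
        entry = self._entries.get(content_id)
        if entry is None:
            return "missing", None
        if "sha256:" + hashlib.sha256(entry.content).hexdigest() != content_id:
            return "tampered", None
        now = now or datetime.now(timezone.utc)
        if now - entry.uploaded_at > self._max_age:
            return "stale", entry
        return "ok", entry


def output_is_valid(store, pointers, now=None) -> bool:
    """An output stands only if it has pointers and every one resolves cleanly."""
    return bool(pointers) and all(
        store.resolve(p, now)[0] == "ok" for p in pointers
    )


store = EvidenceStore(max_age=timedelta(days=30))
t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
cid = store.put(b"auth.log line 4412", "collector-7", "siem-export", now=t0)
```

Because the ID is derived from the content, a model that invents a pointer produces an ID that resolves to nothing, and the output is invalidated without anyone reading its prose.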

Alignment Guardrails & Policy Engine

Declarative policies bound to personas and tasks: allowed data scopes, forbidden instructions, output schemas, safety filters, and domain constraints (e.g., "no clinical advice without source pointer + Tier 3 sign-off"). Evaluate before and after model calls, with fast fail.
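A minimal sketch of what "declarative, evaluated before and after the model call" might mean in code. The `Policy` fields, the `PolicyViolation` exception, and the example persona and scope names are hypothetical; a real policy engine would load such declarations from configuration and cover far more rule types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    persona: str
    task: str
    allowed_scopes: frozenset
    forbidden_phrases: tuple
    required_output_keys: frozenset


class PolicyViolation(Exception):
    pass


def check_request(policy, requested_scopes, prompt):
    """Pre-call check: fail fast before any model is invoked."""
    extra = set(requested_scopes) - policy.allowed_scopes
    if extra:
        raise PolicyViolation(f"data scope not allowed: {sorted(extra)}")
    lowered = prompt.lower()
    for phrase in policy.forbidden_phrases:
        if phrase in lowered:
            raise PolicyViolation(f"forbidden instruction: {phrase!r}")


def check_output(policy, output: dict):
    """Post-call check: the output must match the declared schema."""
    missing = policy.required_output_keys - set(output)
    if missing:
        raise PolicyViolation(f"output missing fields: {sorted(missing)}")
    if not output["source_pointers"]:
        raise PolicyViolation("no source pointer attached")


triage_policy = Policy(
    persona="soc_analyst_assistant",
    task="alert_triage",
    allowed_scopes=frozenset({"alerts", "endpoint_logs"}),
    forbidden_phrases=("ignore previous instructions",),
    required_output_keys=frozenset({"summary", "source_pointers"}),
)
```

The model call itself sits between `check_request` and `check_output`; neither check depends on the model behaving well.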

Deterministic Validators

Anything that can be checked without a model should be: math, format, ontology, regulatory rules, pricing logic. These are your truth rails. They are predictable, testable, and auditable. The model proposes; the validators verify.
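To make "the model proposes; the validators verify" concrete, here is a small sketch covering three of the categories named above: format, ontology, and math. The draft structure, the case ID pattern, and the severity vocabulary are hypothetical examples, not a real schema.

```python
import re
from decimal import Decimal

CASE_ID = re.compile(r"^CASE-\d{4}-\d{4}$")          # hypothetical ID format
SEVERITIES = {"low", "medium", "high", "critical"}   # hypothetical ontology


def check_format(draft):
    if not CASE_ID.match(draft.get("case_id", "")):
        return "case_id does not match CASE-YYYY-NNNN"


def check_ontology(draft):
    if draft.get("severity") not in SEVERITIES:
        return f"unknown severity: {draft.get('severity')!r}"


def check_math(draft):
    # Recompute the total from line items instead of trusting the model's figure.
    expected = sum(
        Decimal(item["unit_price"]) * item["qty"] for item in draft["line_items"]
    )
    if expected != Decimal(draft["total"]):
        return f"total {draft['total']} != computed {expected}"


VALIDATORS = (check_format, check_ontology, check_math)


def validate(draft):
    """Run every validator; return failure messages (an empty list means green)."""
    return [msg for check in VALIDATORS if (msg := check(draft)) is not None]


good = {
    "case_id": "CASE-2024-0042",
    "severity": "high",
    "line_items": [{"qty": 2, "unit_price": "19.99"}],
    "total": "39.98",
}
bad = dict(good, severity="spicy", total="40.00")
```

Each validator is an ordinary function with no model in the loop, so it can be unit-tested and audited like any other deterministic code.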

Decision-Risk Tiers & Gates

Codify tiers (illustrative):

- Tier 1: Low risk (marketing blog), 0–5% sample to human, ship on green.

- Tier 2: Moderate risk (internal financial memo), validator-complete + targeted review.

- Tier 3: High risk (clinical/legal actions, fund transfers), mandatory human approval with full evidence views and attestation.

Gates enforce the minimum bar per tier and route exceptions.
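The illustrative tiers can be expressed as a small gate function. This is a sketch under assumptions: the decision strings are hypothetical, the 5% sample rate is the upper end of the illustrative Tier 1 range, and whether a sampled Tier 1 item is reviewed before or after it ships is left to your policy.

```python
import random
from enum import IntEnum


class Tier(IntEnum):
    LOW = 1       # e.g., marketing blog
    MODERATE = 2  # e.g., internal financial memo
    HIGH = 3      # e.g., clinical/legal actions, fund transfers


def gate(tier, validators_green, human_approved=False,
         sample_rate=0.05, rng=random.random):
    """Return the next step for one output under its tier's minimum bar."""
    if not validators_green:
        return "escalate"  # exceptions are routed, never shipped
    if tier == Tier.HIGH:
        return "ship" if human_approved else "hold_for_approval"
    if tier == Tier.MODERATE:
        return "ship" if human_approved else "targeted_review"
    # Tier 1: ship on green, but pull a small random sample for human review.
    return "sample_review" if rng() < sample_rate else "ship"
```

Passing `rng` as a parameter keeps the sampling decision testable: in tests you can substitute a fixed function, while production uses `random.random`.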

Human Review Ladders

Human review ladders ≠ "throw it over the wall." Humans must get tools: diff views, evidence panel, validator results, risk explanation, and "send back with reason" macros. L1 handles routine exceptions; L2/L3 are Subject Matter Experts (SMEs) for complexity or impact.

Escalation, Rollback, & Kill Switches

Every action is feature-flagged. If drift or abuse is detected, you can:

(a) quarantine what's invoking the system (e.g., a client or an external agent),

(b) roll back to the last-known-good policy/model (e.g., undo a block placed by an AI in a cybersecurity flow),

(c) force Tier 3 review for a period (e.g., when the AI is under direct attack in a client environment),

(d) shut down the pathway entirely (e.g., disable the service when under a resource-exhaustion attack).

Attest who pulled which lever when.
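A minimal sketch of the four levers and the attestation trail, assuming a single in-memory control object per AI pathway. The class, method names, and version labels are hypothetical; a real system would persist the lever log in the audit store and authenticate the actor.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class PathwayControls:
    enabled: bool = True
    forced_tier: Optional[int] = None
    active_version: str = "policy-v12"   # hypothetical version labels
    last_known_good: str = "policy-v11"
    quarantined: set = field(default_factory=set)
    lever_log: list = field(default_factory=list)

    def _attest(self, actor: str, lever: str, detail: str) -> None:
        # Record who pulled which lever, and when.
        self.lever_log.append({
            "actor": actor, "lever": lever, "detail": detail,
            "at": datetime.now(timezone.utc).isoformat(),
        })

    def quarantine(self, actor: str, caller_id: str) -> None:   # lever (a)
        self.quarantined.add(caller_id)
        self._attest(actor, "quarantine", caller_id)

    def roll_back(self, actor: str) -> None:                    # lever (b)
        self._attest(actor, "roll_back",
                     f"{self.active_version} -> {self.last_known_good}")
        self.active_version = self.last_known_good

    def force_tier3(self, actor: str) -> None:                  # lever (c)
        self.forced_tier = 3
        self._attest(actor, "force_tier3", "all outputs need human approval")

    def shut_down(self, actor: str) -> None:                    # lever (d)
        self.enabled = False
        self._attest(actor, "shut_down", "pathway disabled")

    def admit(self, caller_id: str, tier: int) -> Optional[int]:
        """Return the tier to enforce for this call, or None if it is refused."""
        if not self.enabled or caller_id in self.quarantined:
            return None
        return max(tier, self.forced_tier or tier)


controls = PathwayControls()
controls.quarantine("alice", "client-42")
controls.force_tier3("alice")
```

Every request passes through `admit` before reaching the model, so pulling a lever takes effect on the next call without a redeploy.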

Provenance, Audit & Attestation

Immutable logs of who/what decided, on what data, using which versions, with which guardrails, and the evidence pointers that justified it. Attestations (human and system) make accountability real — and defensible.
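One common way to make a log tamper-evident is to chain each entry to the hash of the previous one, so that any later edit breaks verification. The sketch below illustrates that technique in memory; the `AuditLog` class and the event fields are hypothetical, and this is not a claim about how any particular product stores its logs.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log where each entry commits to the one before it."""

    def __init__(self):
        self._entries = []

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        body = json.dumps(event, sort_keys=True)
        return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS
        stored = json.loads(json.dumps(event))  # private copy of the event
        entry_hash = self._digest(prev_hash, stored)
        self._entries.append({"prev": prev_hash, "event": stored, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry fails the check."""
        prev_hash = GENESIS
        for entry in self._entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(prev_hash, entry["event"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"actor": "validator", "decision": "green", "policy": "policy-v12"})
log.append({"actor": "reviewer:alice", "decision": "approve",
            "evidence": ["sha256:demo"], "model": "model-a"})
```

A human attestation is then just another event in the chain, recorded with the versions and evidence pointers it relied on.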

How Control Loops Look in Practice

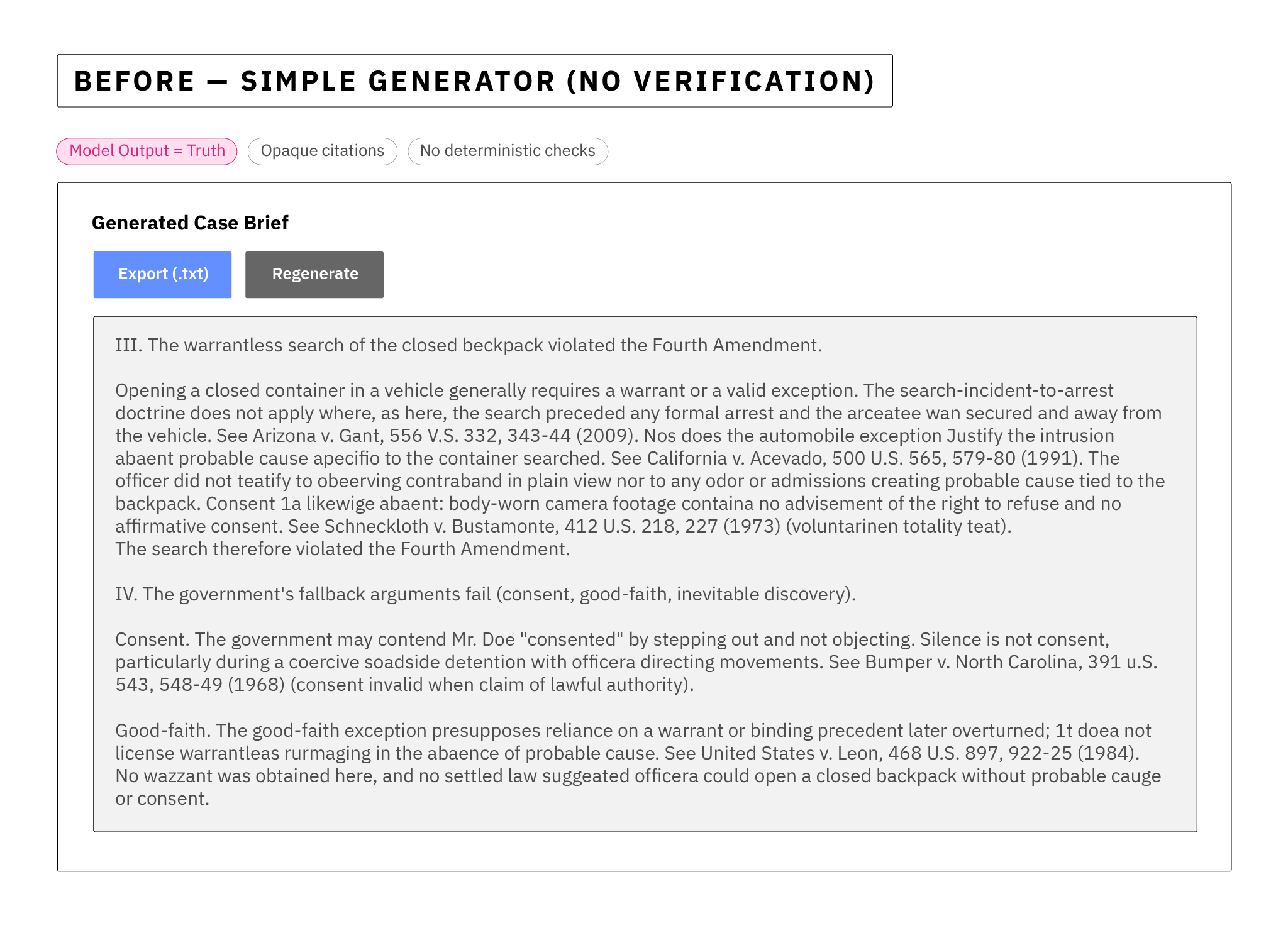

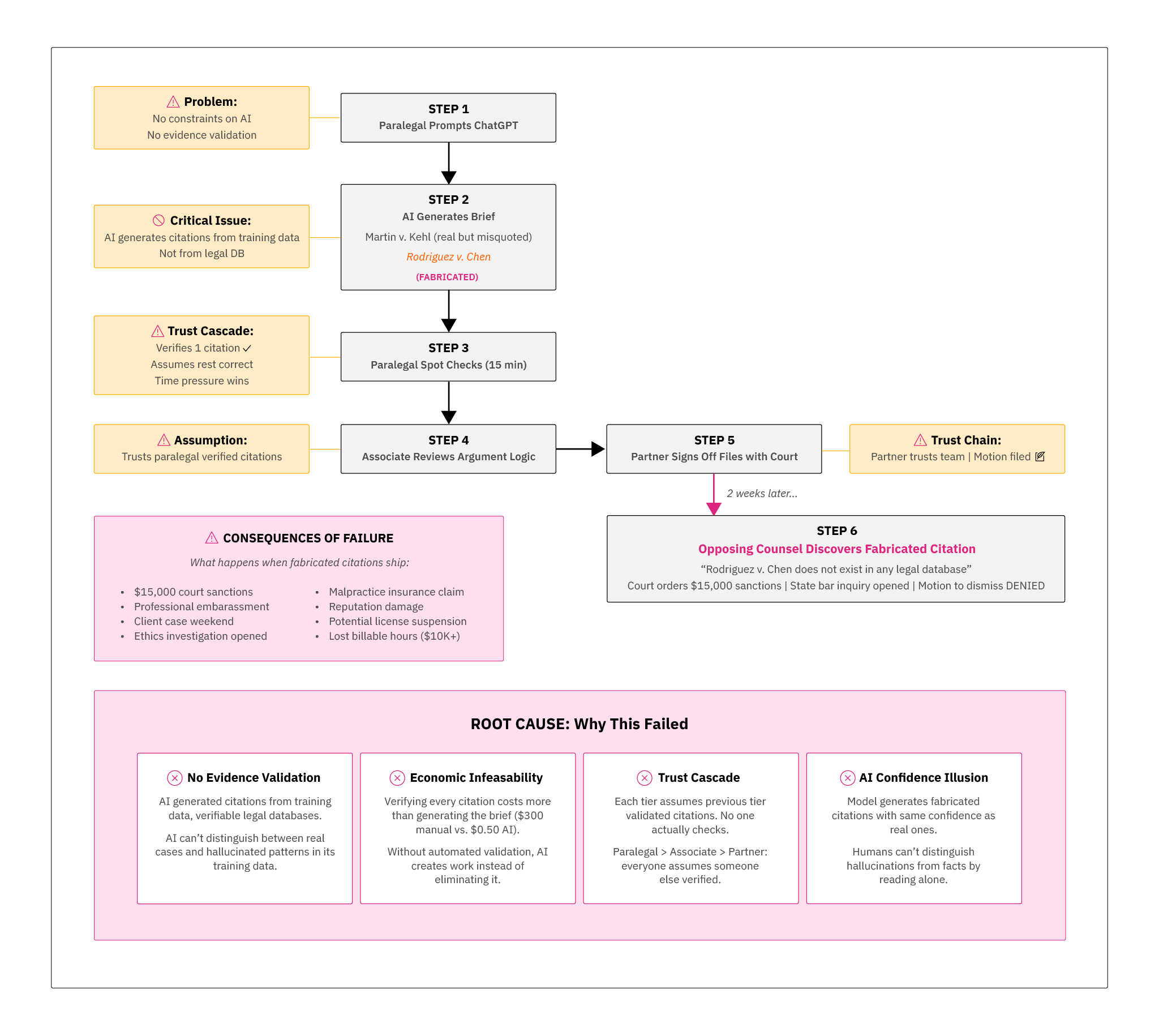

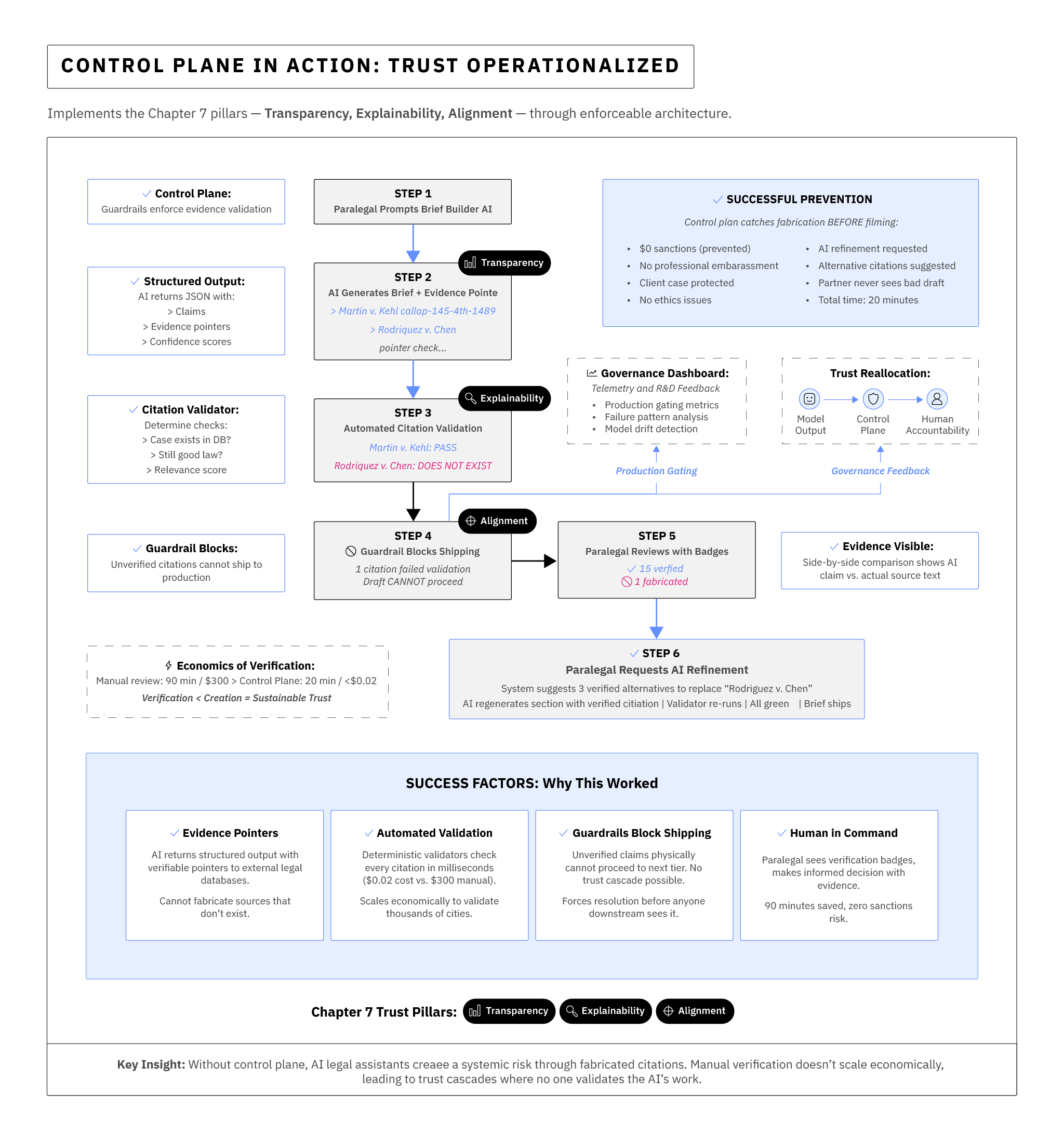

Legal Assistant — catching fabricated citations by design

Let's go back to our legal assistant example from the last chapter, but with a greater focus on the process flow the team experiences using the tools. I'll repeat the visuals for the tools here for easy refreshing, but this time we are focused on breaking down the processes running underneath the surface while using them.

Above: Recall the tool as experienced by the legal team before a control plane is implemented.


No Control Plane: In this stage, AI bots produce crisp memos with case citations in one go. Reviewers have no structured way to spot fakes without re-researching everything. In practice, they gut-check the AI's output for plausibility, then either accept it or repeat the entire drafting process to verify it. Look at the flow here to see the various problems and trust cascades that result.

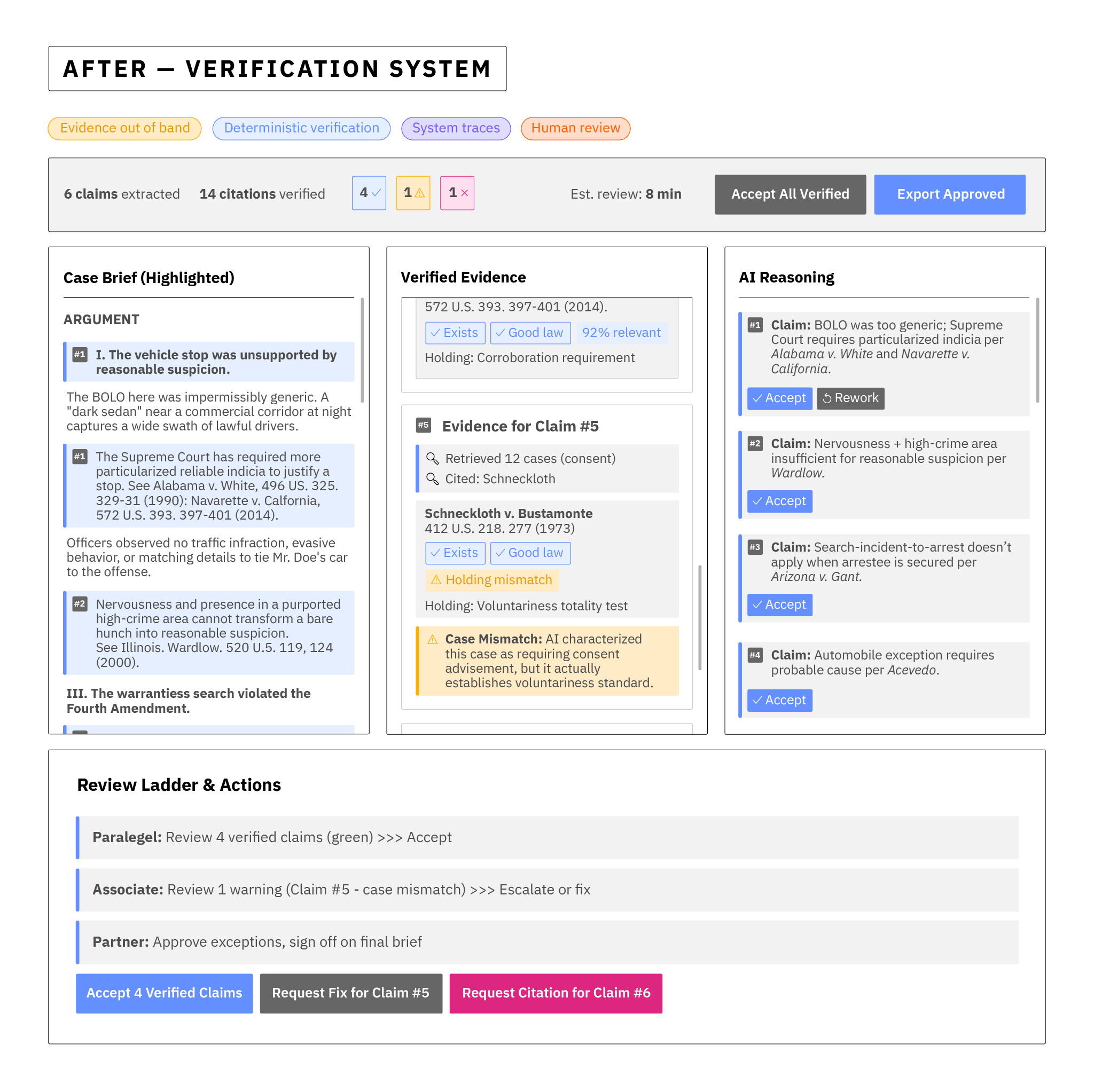

Now recall what the same tool looked like after the control plane was implemented:

The flow that follows shows how the control plane resolves the practical issues we faced earlier—and how it reframes the operator's role around judgement and verification as their new core function.

Why it works: Trust is firmly and clearly moved to the evidence system and validators, not the model's prose. Humans use newly crafted deterministic software tools for validation around the AI, which let them focus effort only where automated verification fails or cannot be done, not on redoing the entire brief. They also get cues that show trust is being earned, and the tools raise a red flag when it isn't. This prevents trust cascades, and it prevents reviewers from falling back on blind trust in the model's outputs during time crunches.
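A sketch of the kind of deterministic check that catches a fabricated citation. It assumes citations arrive as structured records with a lookup key and a quoted passage, and that `registry` maps each key to source text held outside the model. Both structures, the green/yellow/red statuses, and the placeholder case names are hypothetical.

```python
import re


def _normalize(text: str) -> str:
    """Collapse whitespace and case so formatting differences don't cause misses."""
    return re.sub(r"\s+", " ", text).strip().lower()


def verify_citations(citations, registry):
    """Classify each citation as green, yellow, or red against stored sources."""
    results = []
    for cite in citations:
        source = registry.get(cite["key"])
        if source is None:
            status = "red"      # no such source: possible fabrication
        elif _normalize(cite["quote"]) not in _normalize(source):
            status = "yellow"   # source exists, quoted language does not
        else:
            status = "green"
        results.append({"key": cite["key"], "status": status})
    return results


registry = {
    "example-v-sample-2001": "The court held that   notice must be given in writing.",
}
memo = [
    {"key": "example-v-sample-2001", "quote": "notice must be given in writing"},
    {"key": "example-v-sample-2001", "quote": "notice may be given orally"},
    {"key": "placeholder-v-nobody-1999", "quote": "any text"},
]
report = verify_citations(memo, registry)
```

Greens need no human time, yellows go to a reviewer with the source passage alongside the quote, and reds block the memo. Whether a cited passage actually supports the argument remains a human judgment.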

Design Principles — and Their Mirror-Image Traps

| Principle (What good looks like) | Why it matters | Anti-pattern ("When a control plane isn't one") |
|---|---|---|
| Enforcement as code: policies execute in the pipeline | Paper policies don't stop packets | "Policy PDF on SharePoint; nothing enforces it." |
| Pointers over prose: evidence referenced, not authored | Models can fabricate; stores can't | "The AI explains itself with quotes it wrote." |
| Deterministic checks before engaging humans | Cheap, consistent, fast failure | "Humans go find what a regex could have." |
| Escalation by design: yellow routes to the right reviewer | Humans spend time on judgment, not hunting | "Dashboards light up; nobody knows who acts." |
| Observability everywhere: retrieval manifests, tool logs, approvals | You can reconstruct decisions quickly | "We have a dashboard; we cannot tell what happened." |
| Risk-aware gates: high-risk outputs can't bypass checks | Prevents catastrophic leakage/actions | "Everything is allowed; we 'monitor' later." |
| Drift watch: detect model performance change before it hurts you | Quiet degradation is the common failure | "Accuracy looks fine; distribution is just different." |
| Human workbench: verify in clicks, not hours | Verification becomes economical | "The human redoes the whole workflow to be safe." |

Reviewing the Economics of Trust

All the examples in this chapter help arm you with hard evidence against the persistent myth that verification by humans cancels AI's gains. That argument only holds if "verification" means forcing a human to manually reproduce the entire work product. With a well-designed control plane, it no longer applies.

In the SOC, validating artifact pointers and timeline alignment now takes minutes; writing an investigation from scratch takes an hour. In legal, checking clause fingerprints is near instant; redlining by hand is not. In healthcare, enforcing contraindication rules is automatic; re-researching literature is not. With deliberate investment during AI transformation (retiring legacy roles and workflows, then rebuilding around verification outputs within a control plane), human effort shifts from manual production to high-leverage validation, making it far more cost-effective. This is how you preserve the productivity dividend from AI without compromising trust. In economic terms, it's how you create a differentiated value-add on top of AI's cheap, ubiquitous outputs, which would otherwise be near-free AI slop.

Integration: Where the Control Plane Lives

Because this enterprise architecture rethinks the core reason you even have human roles in your enterprise, it cannot be a bolt-on. It threads the whole business lifecycle — integrated with your data pipelines, model registry, deployment harness, and operations center (AIOps/SOC/NOC). It must give AI automation engineers the observability to see model drift, to A/B test models with confidence, and to ship safely — and it must also give executives and customers the assurance that trust in your outputs was carefully engineered and built with the same rigor as any mission-critical system.

Cost & Value: The Business Case in One Sentence

Point AI at work that is cheap to verify and costly to produce by hand. Engineer the control plane so your verification work is fast and defensible. Avoid areas where verifying equals solving unless you can decompose verification into easy checks. Since verification is where value accrues, pick problems that are inexpensive for you to verify and expensive for competitors. Your verification stack is now your product, and the only moat safeguarding the value of what is otherwise the near-free output of AI generation.

Bringing it all together

You can't earn trust by promising that your model is special and somehow immune to the underlying limitations of generative AI, no matter how many times it has performed well in production. You can only earn it by showing that your system protects your customers when the data is messy, the incentives are skewed, and the AI has gone rogue and is actively trying to break your product or service. The tool for earning that trust is the control plane.

With this architecture, your verification work becomes a competitive advantage: the fastest way to unlock speed without adding a new risk, namely spraying AI slop everywhere and devaluing your product and brand in the eyes of your customers and regulators. In the SOC, in clinics, in law firms, and even in marketing, the pattern holds: AI does the work, a control plane makes it trustworthy, and people must still own and be accountable for the outcomes that matter.

Next, we turn to how that impacts the human side — the new accountability work that is emerging from the AI disruption. If this chapter was how you do the wiring for a successful intelligent enterprise, the next is the heart. We'll define the roles, the competencies, and the practices you must emphasize in your organizational culture to turn the technical guardrails into good decisions. After that, we'll discuss how to assign friction intelligently with decision-risk tiers and review ladders, so that speed and safety stop fighting and start compounding.

Trust, at scale, is never free. But with the right control plane, it can be far cheaper than it initially looks — and it's the only way to keep your outputs valued in the days of free generation.